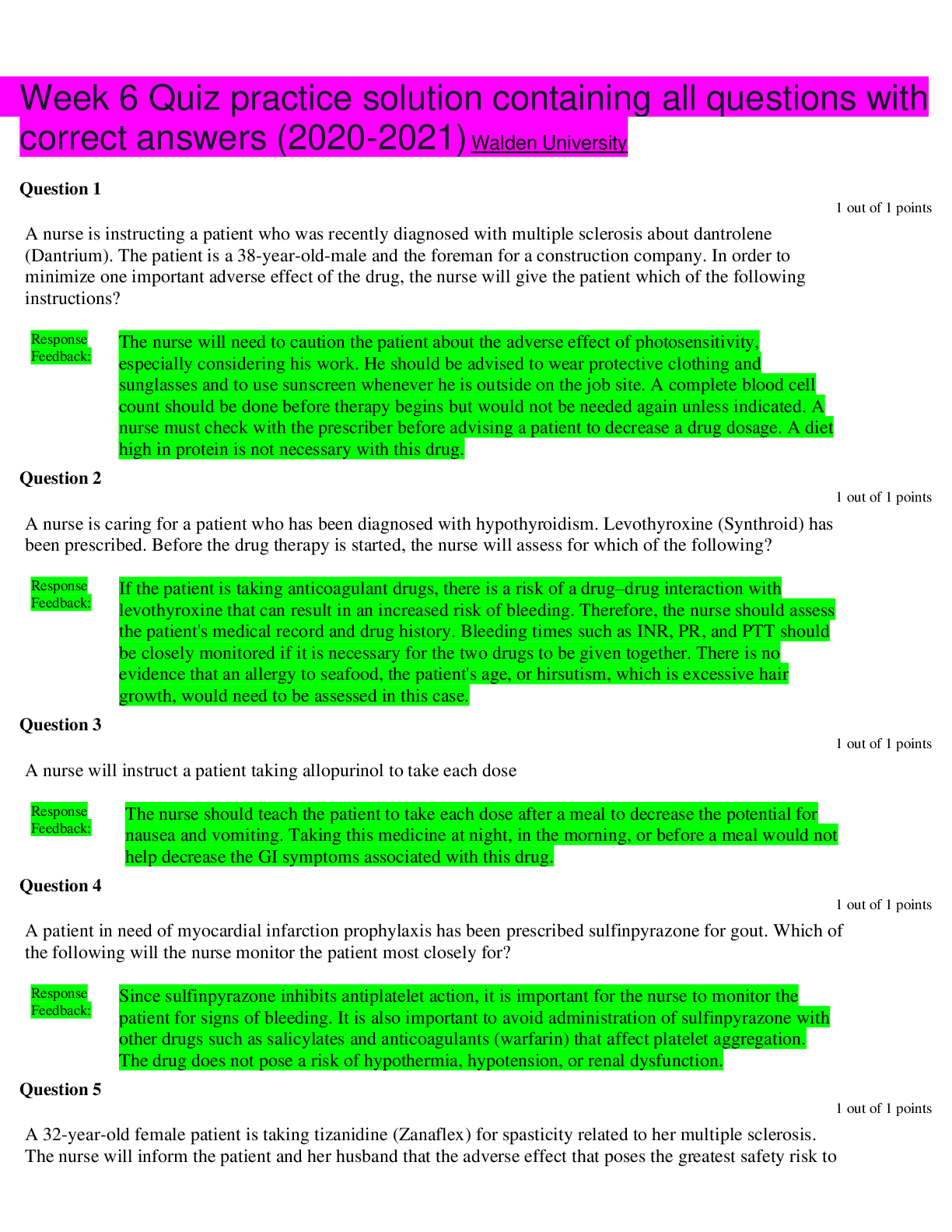

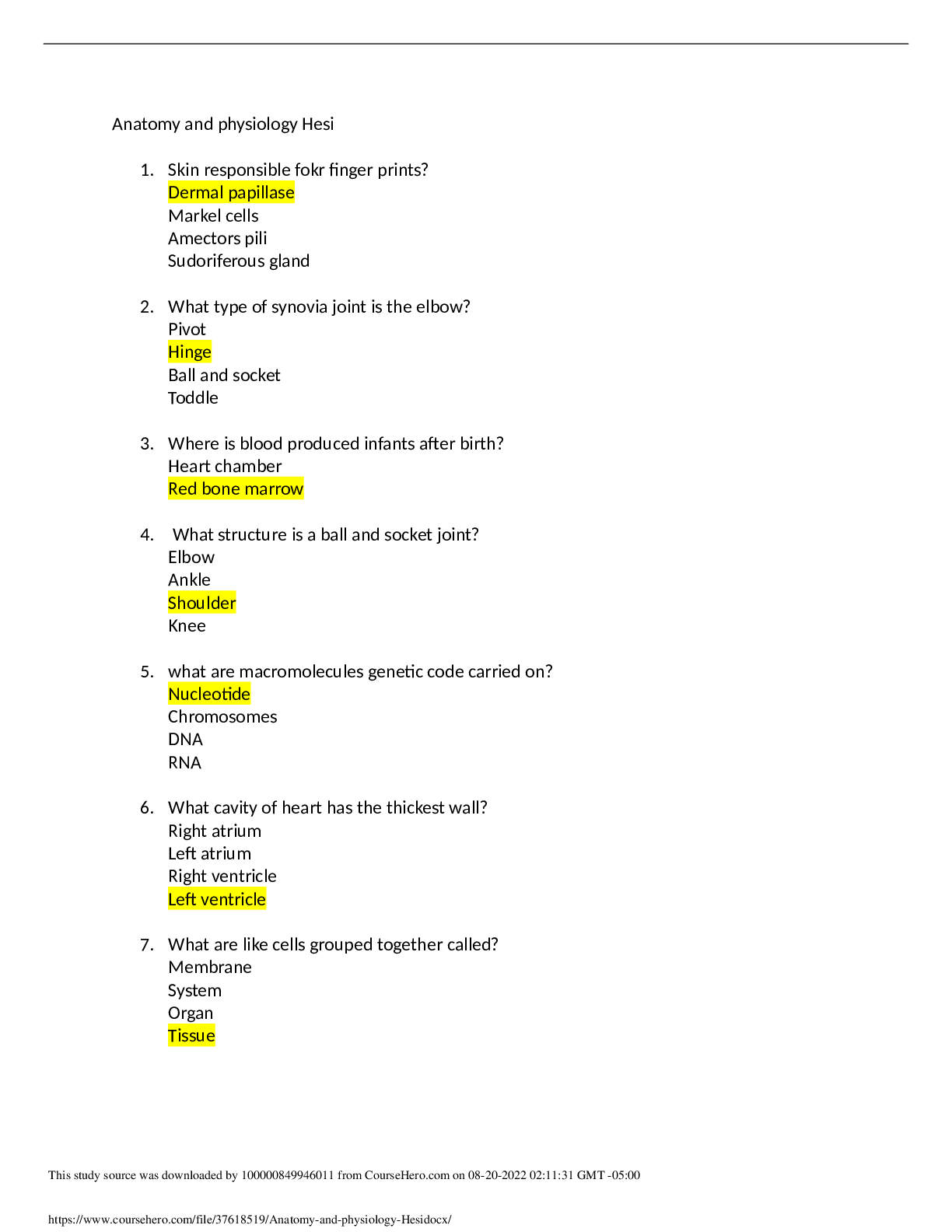

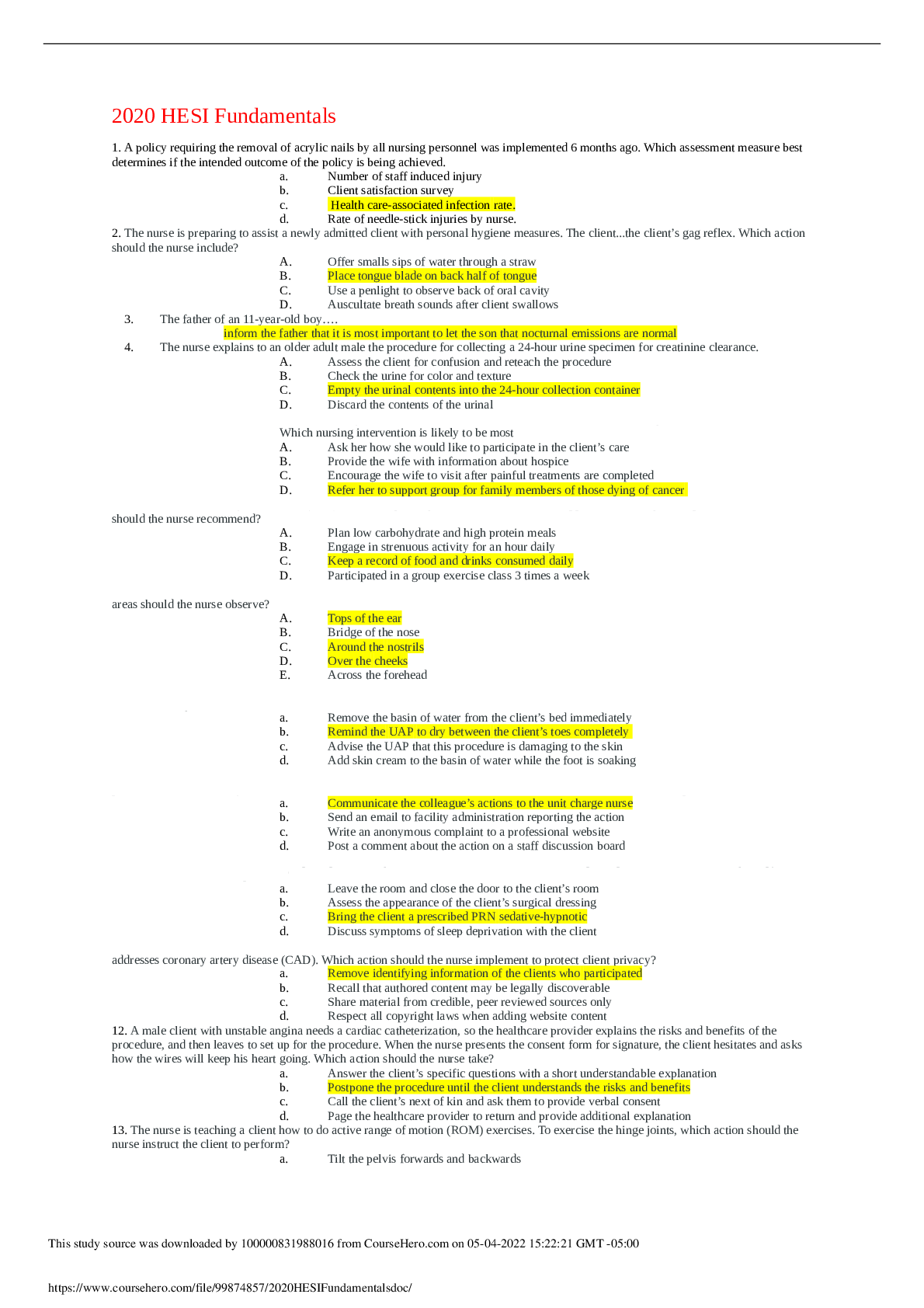

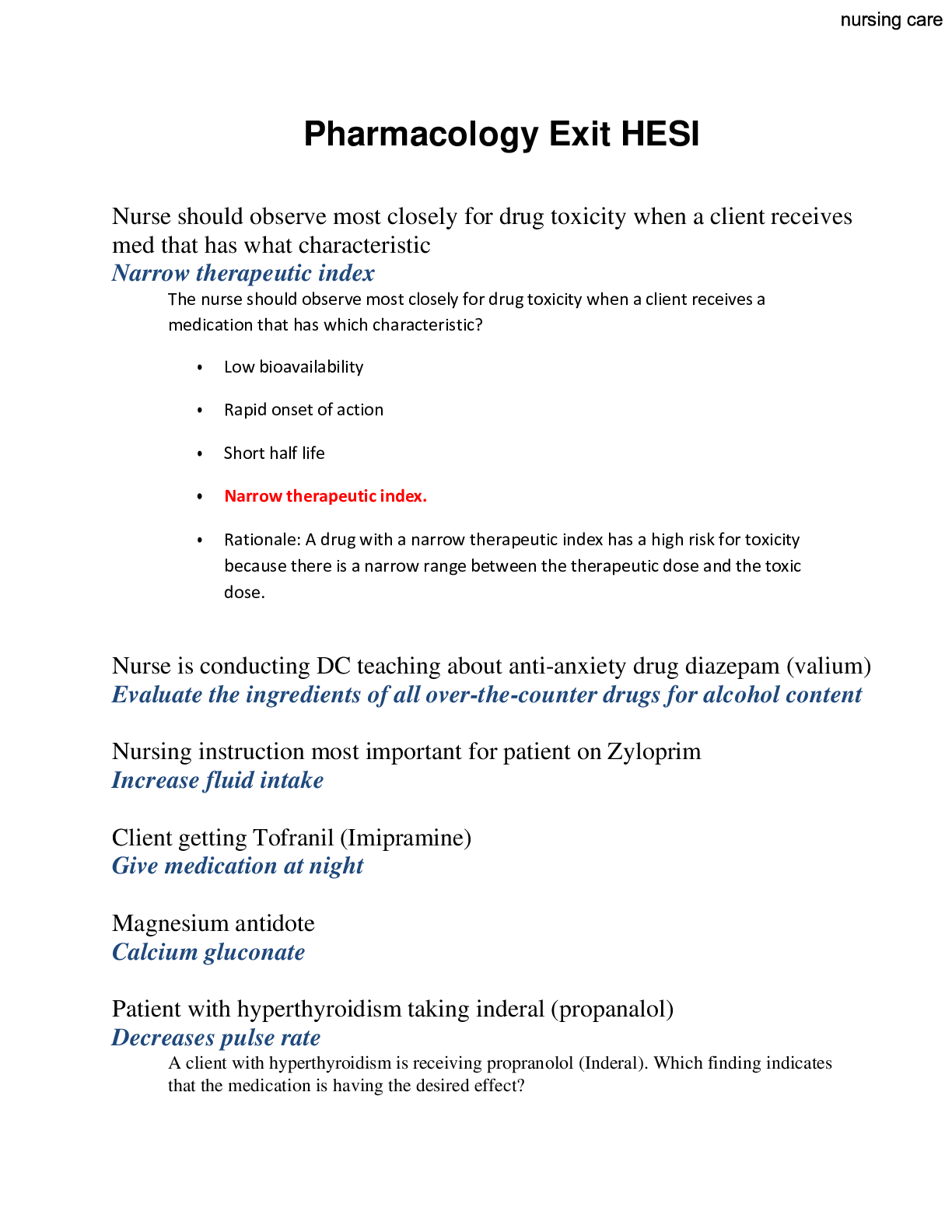

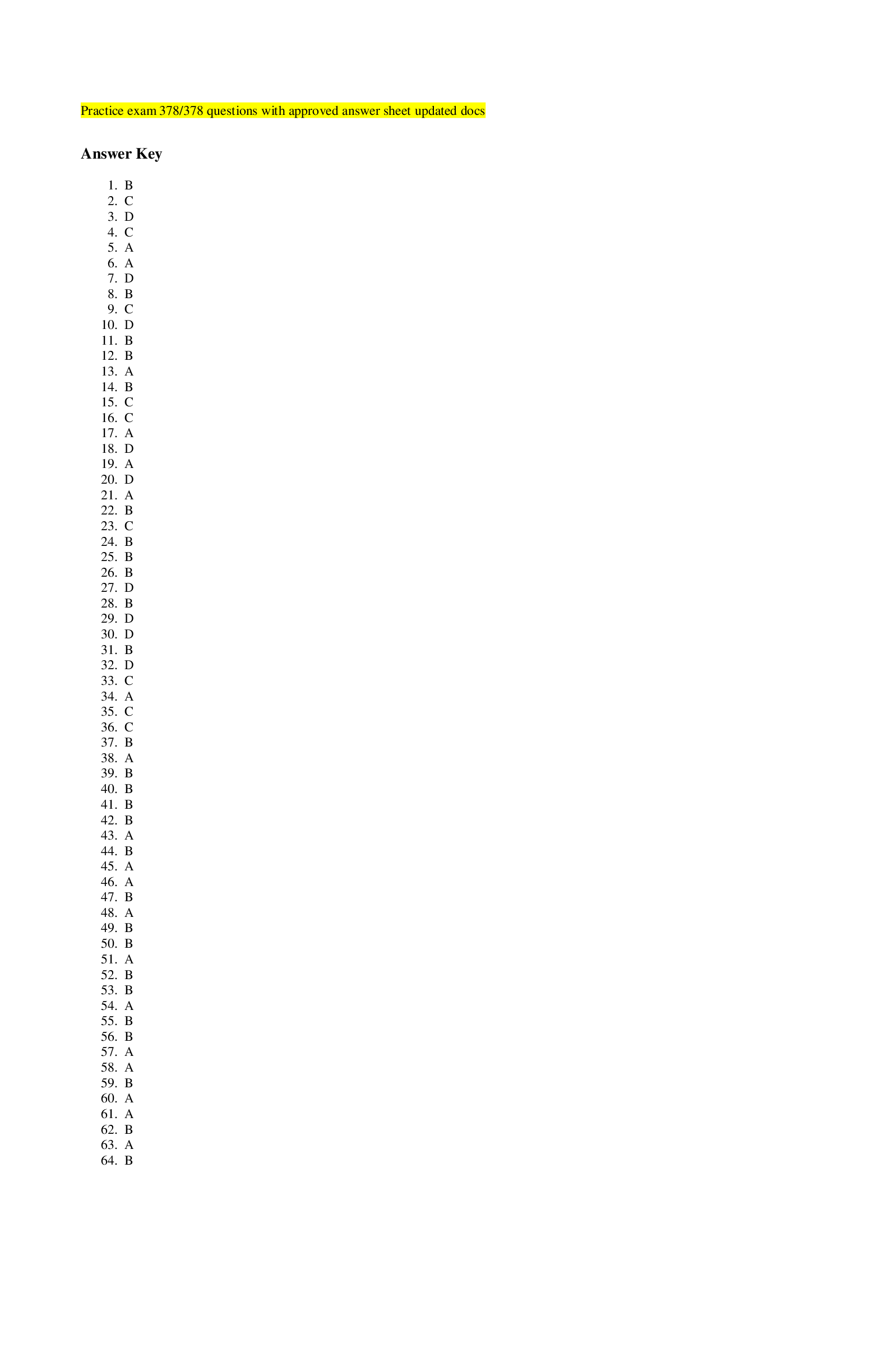

Practice exam 378/378 questions with approved answer sheet updated docs

Document Content and Description Below