CS 229 midterm exam 2015 Stanford University

Document Content and Description Below

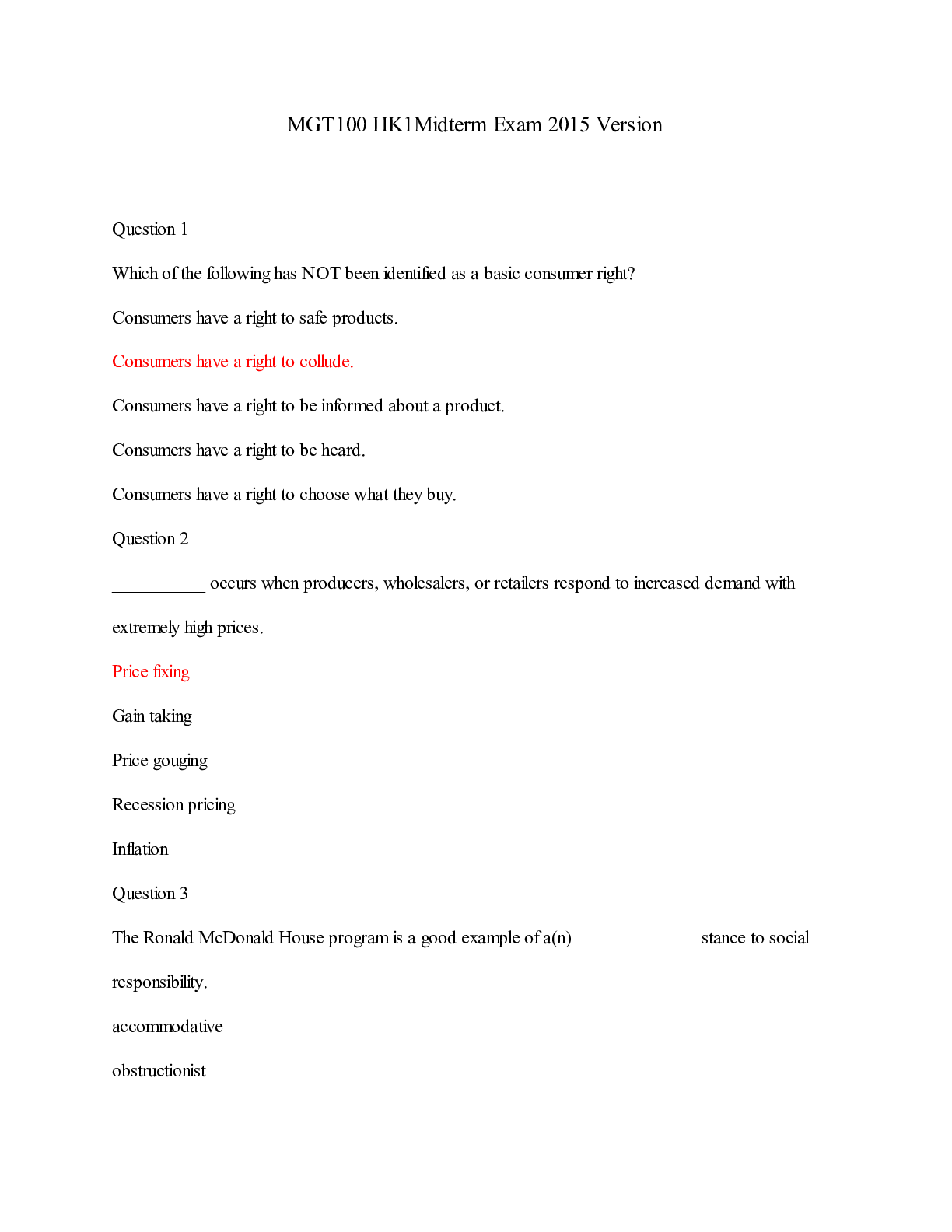

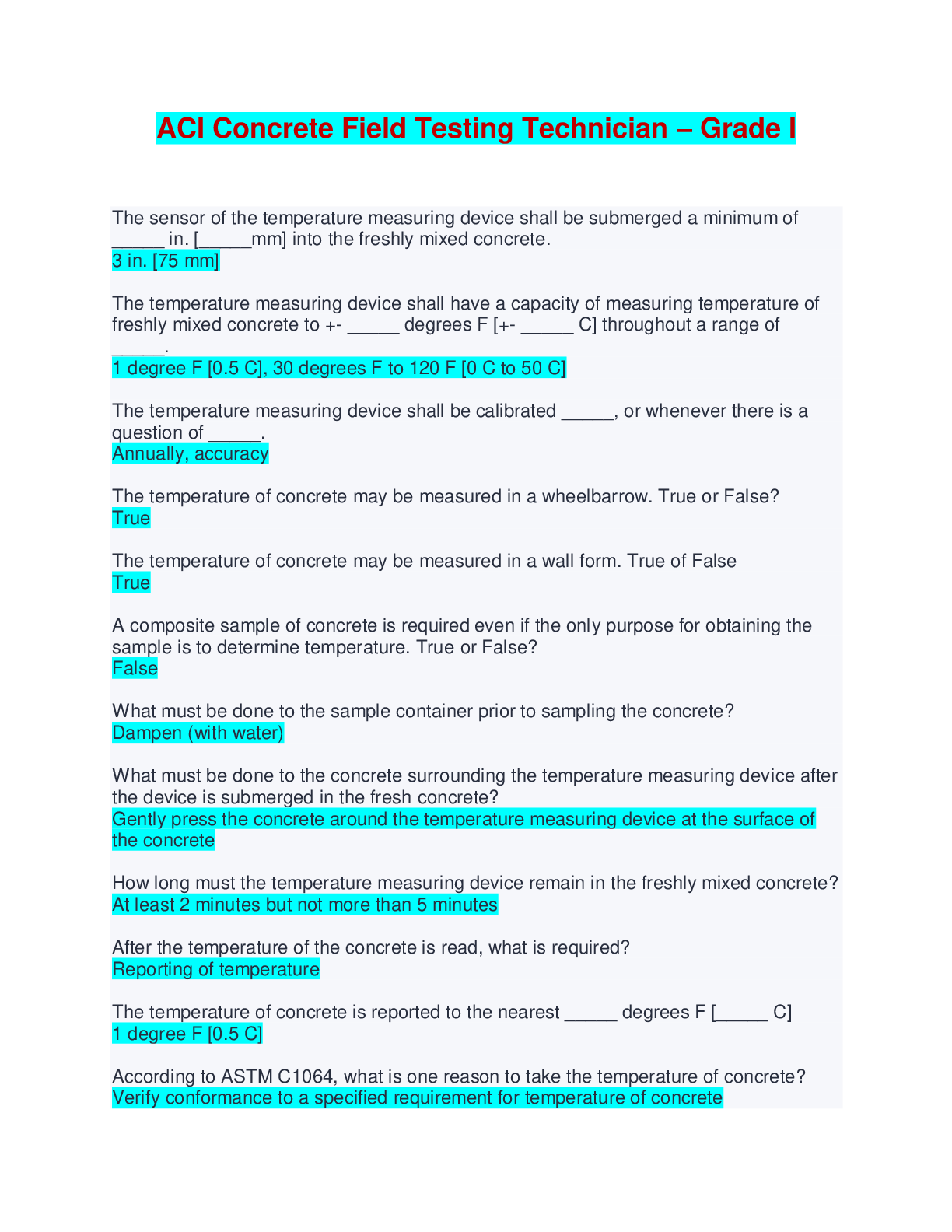

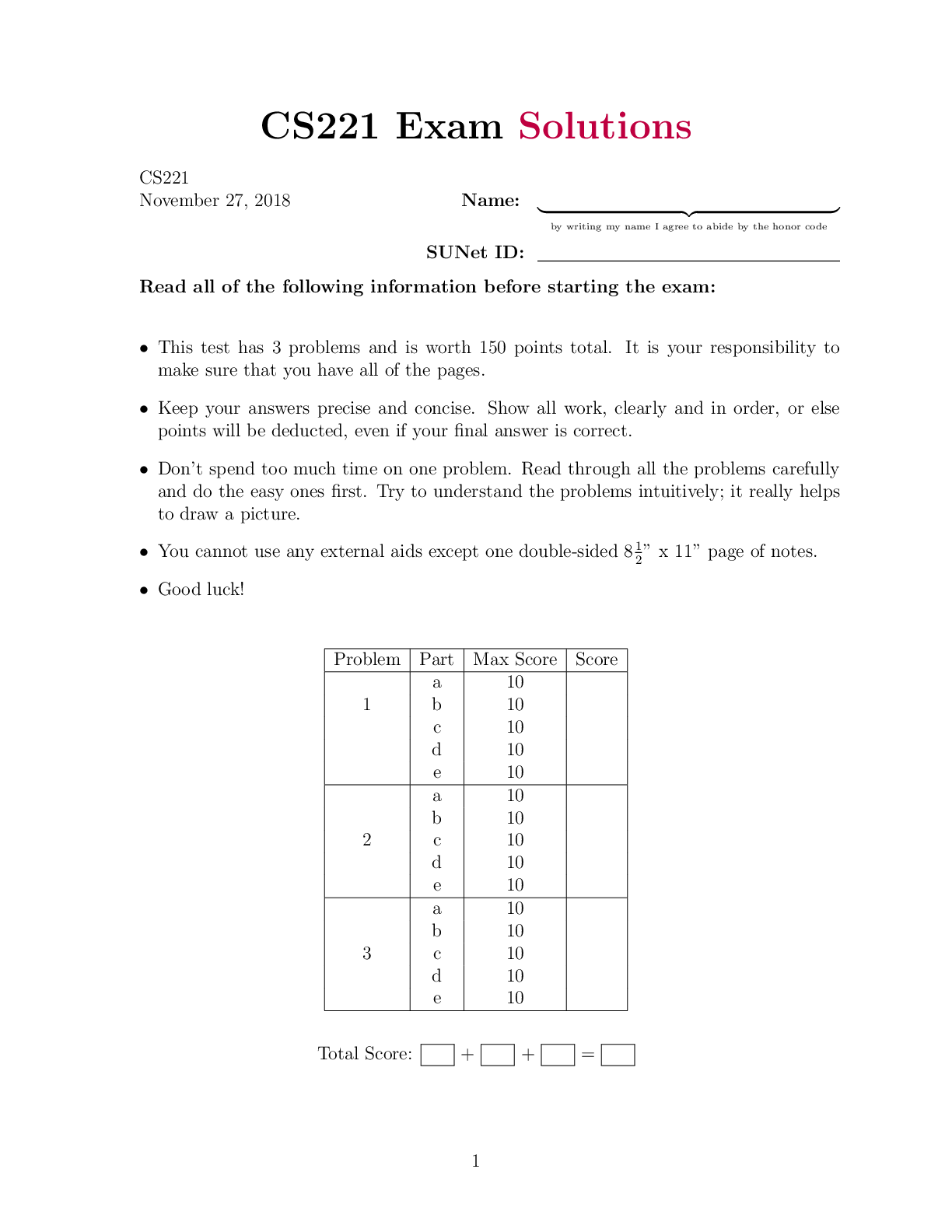

STANFORD UNIVERSITY
CS 229, Autumn 2015
Midterm Examination
Wednesday, November 4, 6:00pm-9:00pm

| Question | Topic | Points |
|---|---|---|
| 1 | Short Answers | /26 |
| 2 | More Linear Regression | /10 |
| 3 | Generalized Linear Models | /17 |
| 4 | Naive Bayes and Logistic Regression | /17 |
| 5 | Anomaly Detection | /15 |
| 6 | Learning Theory | /15 |
| | Total | /100 |

Name of Student:

SUNetID: @stanford.edu

The Stanford University Honor Code: I attest that I have not given or received aid in this examination, and that I have done my share and taken an active part in seeing to it that others as well as myself uphold the spirit and letter of the Honor Code.

Signed:

1. [26 points] Short answers

The following questions require a reasonably short answer (usually at most 2-3 sentences or a figure, though some questions may require longer or shorter explanations). To discourage random guessing, one point will be deducted for a wrong answer on true/false or multiple choice questions! Also, no credit will be given for answers without a correct explanation.

(a) [6 points] Suppose you are fitting a fixed dataset with $m$ training examples using linear regression, $h_\theta(x) = \theta^T x$, where $\theta, x \in \mathbb{R}^{n+1}$. After training, you realize that the variance of your model is relatively high (i.e., you are overfitting). For each of the following methods, indicate true if the method can mitigate your overfitting problem and false otherwise. Briefly explain why.

i. [3 points] Add additional features to your feature vector.

Answer: False. Additional features make the model more complex, letting it fit more of the noise and outliers in the training set, which increases overfitting.

ii. [3 points] Impose a prior distribution on $\theta$ of the form $\mathcal{N}(0, \tau^2 I)$, and derive $\theta$ via maximum a posteriori (MAP) estimation.

Answer: True. Imposing a prior belief on the distribution of $\theta$ effectively limits the norm of $\theta$, since parameter vectors with a larger norm have a lower prior probability (with this Gaussian prior, MAP estimation amounts to adding an $\ell_2$ penalty on $\theta$ to the least-squares objective).
Thus, it makes our model less susceptible to overfitting.

(b) [3 points] Choosing the parameter $C$ is often a challenge when using SVMs. Suppose we choose $C$ as follows: First, train a model for a wide range of values of $C$. Then, evaluate each model on the test set. Choose the $C$ whose model has the best performance on the test set. Is the performance of the chosen model on the test set a good estimate of the model's generalization error?

Answer: No. Because $C$ is selected using the test set, the test set is no longer separate from model development. As a result, the choice of $C$ may be overfit to the test set, so the chosen model's test performance is likely an optimistic estimate of its generalization error: the model might not generalize as well to new examples, and there is no way to detect this because the test set was already used to choose $C$.

(c) [11 points] For each of the following, provide the VC dimension of the described hypothesis class and briefly explain your answer.

i. [3 points] Assume $\mathcal{X} = \mathbb{R}^2$. $\mathcal{H}$ is a hypothesis class containing a single hypothesis $h_1$ (i.e., $\mathcal{H} = \{h_1\}$).

Answer: $VC(\mathcal{H}) = 0$. The VC dimension of a single hypothesis is always zero because a single hypothesis can only assign one labeling to a set of points, so it cannot shatter even a single point (which requires two labelings).

ii. [4 points] Assume $\mathcal{X} = \mathbb{R}^2$. Let $\mathcal{A}$ be the set of all convex polygons in $\mathcal{X}$. $\mathcal{H}$ is the class of all hypotheses $h_P(x)$ (for $P \in \mathcal{A}$) such that

$$h_P(x) = \begin{cases} 1 & \text{if } x \text{ is contained within polygon } P \\ 0 & \text{otherwise.} \end{cases}$$

Hint: Points on the edges or vertices of $P$ are included in $P$.

Answer: $VC(\mathcal{H}) = \infty$. For any positive integer $n$, take $n$ points from $\mathcal{X}$: place the points $\{x_1, x_2, \ldots, x_n\}$ uniformly spaced on the unit circle. Then for each of the $2^n$ subsets of this data set, there is a convex polygon whose vertices are exactly the points in that subset (for subsets with fewer than three points, a sufficiently small or thin polygon around them works). Because all the points lie on the circle, this polygon contains the subset and excludes its complement. Therefore, for all $n$, the shattering coefficient is $2^n$, and thus the VC dimension is infinite.

iii. [4 points] $\mathcal{H}$ is the class of hypotheses $h_{(a,b)}(x)$ such that each hypothesis is represented by a single open interval in $\mathcal{X} = \mathbb{R}$ as follows:

$$h_{(a,b)}(x) = \begin{cases} 1 & \text{if } a < x < b \\ 0 & \text{otherwise.} \end{cases}$$

Answer: $VC(\mathcal{H}) = 2$. Take, for example, the two points $\{0, 2\}$. We can shatter these two points by choosing the intervals $\{(3, 5), (-1, 1), (1, 3), (-1, 3)\}$ for our hypotheses. These correspond to the labelings $\{(0, 0), (1, 0), (0, 1), (1, 1)\}$. We cannot shatter any set of three points $\{x_1, x_2, x_3\}$ with $x_1 < x_2 < x_3$, because the labeling $x_1 = x_3 = 1$, $x_2 = 0$ cannot be realized: any interval containing $x_1$ and $x_3$ also contains $x_2$. More generally, alternating labelings of consecutive points cannot be realized.
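The short Python sketch below brute-forces the two shattering arguments from parts (c)ii and (c)iii. It is an illustration, not part of the exam solutions, and the helper names (`in_hull`, `circle_points`, `shattered_by_convex_polygons`, `interval_realizes`, `shattered_by_intervals`) are hypothetical. Assumptions: the polygon check uses the convex hull of the positively labeled points as $P$ and requires the points to be given in counterclockwise convex position (true for points taken in angular order on a circle); when there are fewer than three positive points, the hull is empty, a point, or a segment, and we assume a sufficiently small or thin polygon around it yields the same labeling. Floating-point comparisons use a small tolerance `eps`.

```python
import itertools
import math


# ---------- Part (c)ii: convex polygons in R^2 ----------

def cross(o, a, b):
    """z-component of the cross product (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def in_hull(p, verts, eps=1e-9):
    """Closed membership test (boundary counts as inside) for the hull of `verts`.

    Assumes `verts` are in counterclockwise convex position.
    """
    k = len(verts)
    if k == 0:
        return False  # empty hull: a polygon placed away from all points
    if k == 1:
        return math.dist(p, verts[0]) <= eps
    if k == 2:
        a, b = verts
        # On segment ab iff collinear with it and inside its bounding box.
        return (abs(cross(a, b, p)) <= eps
                and min(a[0], b[0]) - eps <= p[0] <= max(a[0], b[0]) + eps
                and min(a[1], b[1]) - eps <= p[1] <= max(a[1], b[1]) + eps)
    # In (or on) a CCW convex polygon iff p is never strictly right of an edge.
    return all(cross(verts[i], verts[(i + 1) % k], p) >= -eps for i in range(k))


def circle_points(n):
    """n points uniformly spaced on the unit circle, in counterclockwise order."""
    return [(math.cos(2 * math.pi * j / n), math.sin(2 * math.pi * j / n))
            for j in range(n)]


def shattered_by_convex_polygons(points):
    """Try all 2^n labelings, using the hull of the 1-labeled points as P."""
    for labels in itertools.product([0, 1], repeat=len(points)):
        inside = [p for p, y in zip(points, labels) if y == 1]
        outside = [p for p, y in zip(points, labels) if y == 0]
        if any(in_hull(q, inside) for q in outside):
            return False
    return True


# ---------- Part (c)iii: open intervals in R ----------

def h(a, b, x):
    """The hypothesis h_(a,b)(x) = 1 if a < x < b, else 0."""
    return 1 if a < x < b else 0


def interval_realizes(points, labels):
    """Can some open interval label exactly the 1-points as positive?"""
    pos = [x for x, y in zip(points, labels) if y == 1]
    if not pos:
        return True  # an interval to the right of every point labels all 0
    lo, hi = min(pos), max(pos)
    # Any interval containing lo and hi contains [lo, hi], so no 0-point may lie there.
    return all(not (lo <= x <= hi) for x, y in zip(points, labels) if y == 0)


def shattered_by_intervals(points):
    return all(interval_realizes(points, labels)
               for labels in itertools.product([0, 1], repeat=len(points)))


print(all(shattered_by_convex_polygons(circle_points(n)) for n in range(1, 11)))  # True
print([tuple(h(a, b, x) for x in (0, 2))
       for a, b in [(3, 5), (-1, 1), (1, 3), (-1, 3)]])  # [(0, 0), (1, 0), (0, 1), (1, 1)]
print(shattered_by_intervals([0, 2]), shattered_by_intervals([0, 1, 2]))  # True False
```

Checking finitely many $n$ does not by itself prove $VC(\mathcal{H}) = \infty$ for convex polygons; the argument in the answer covers every $n$, and the sketch only confirms the construction for small cases. Likewise, the interval check confirms the labelings for $\{0, 2\}$ and the failure on one particular triple.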

Also available in bundle (1)

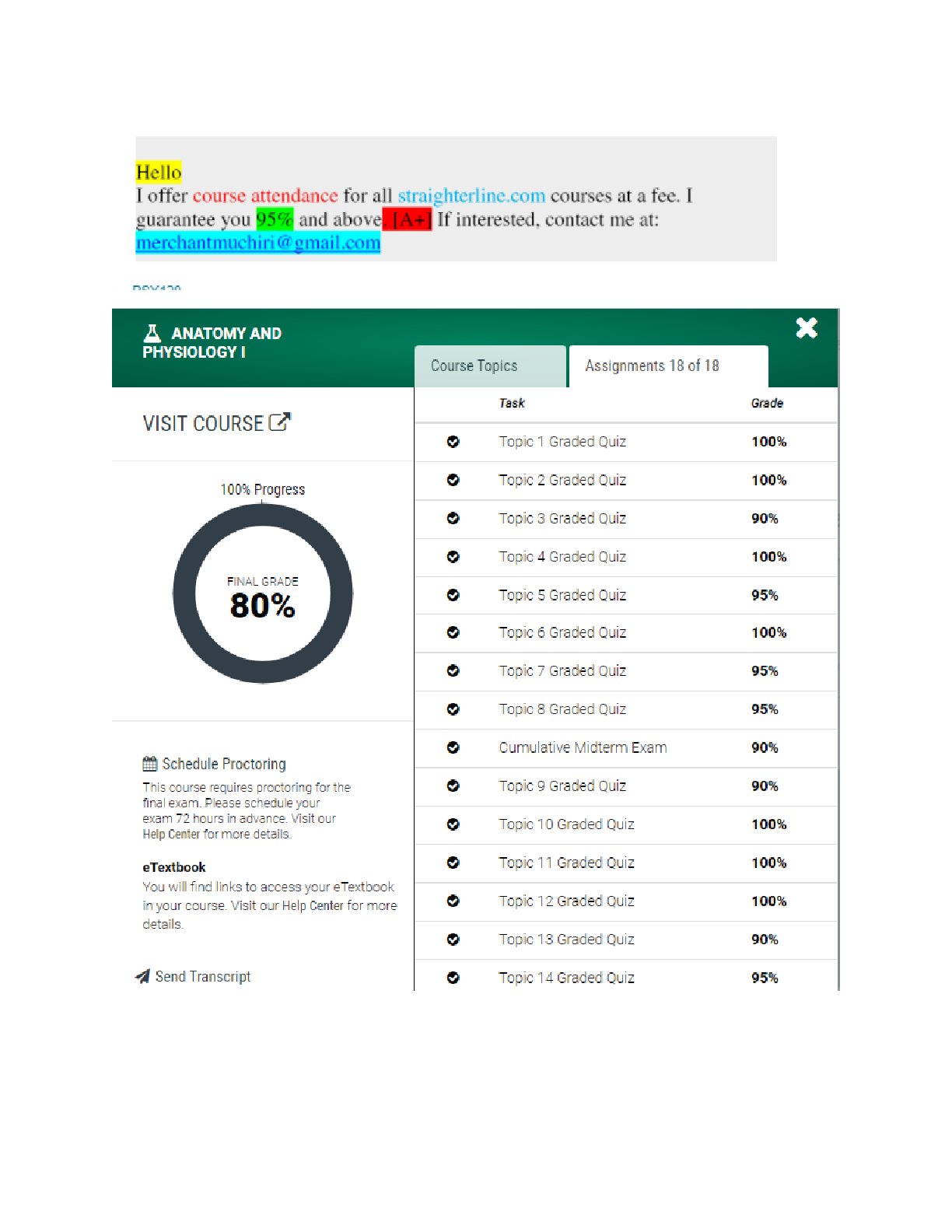

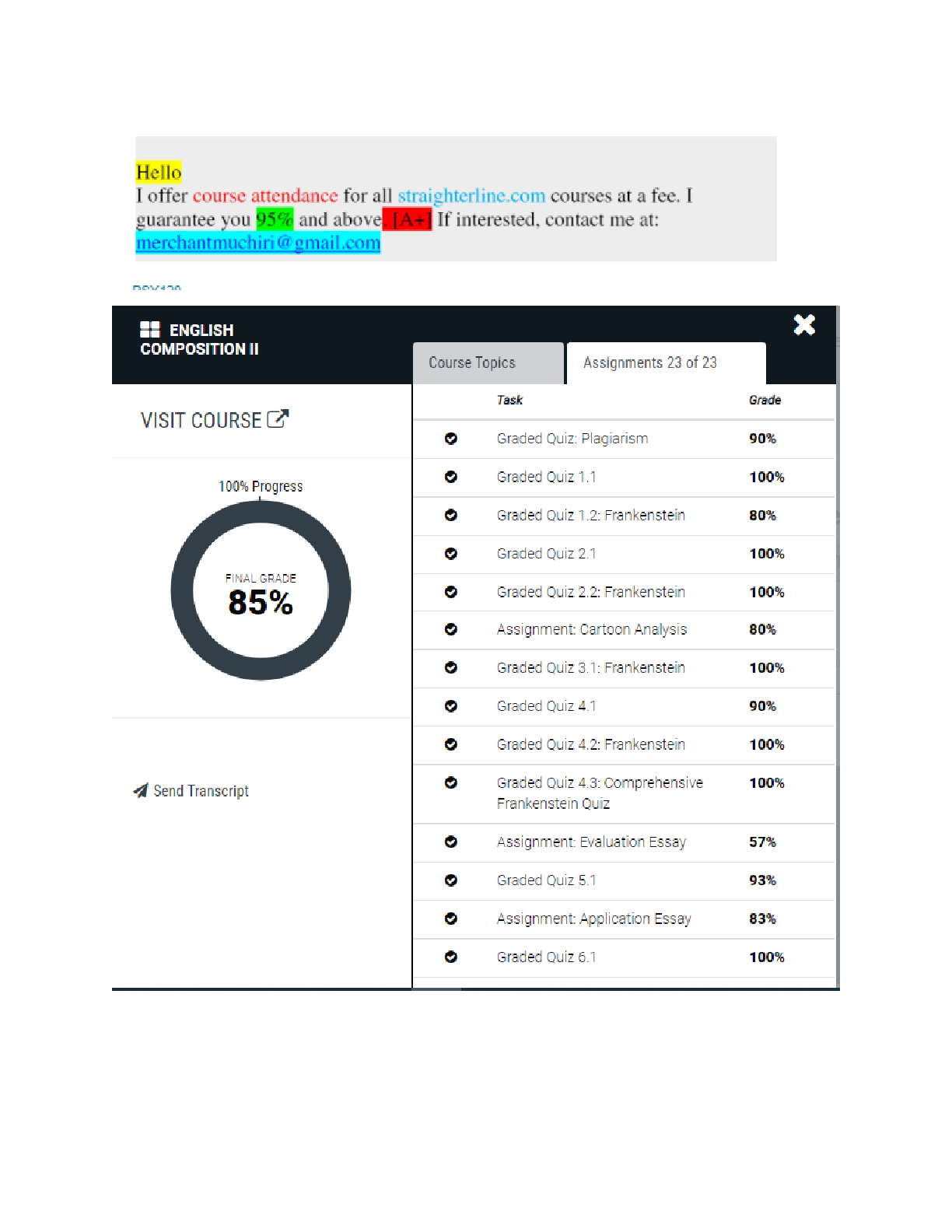

Stanford University CS 221 exams (2014, 2015, 2016, 2017, 2018) , aut2018-exam, midterm2015, Midterm Spring 2019

Stanford University CS 221 exams (2014, 2015, 2016, 2017, 2018) , aut2018-exam, midterm2015, Midterm Spring 2019

By Muchiri 3 years ago

$25

8
