
Stanford University - CS 221 Exam 2014 Solution


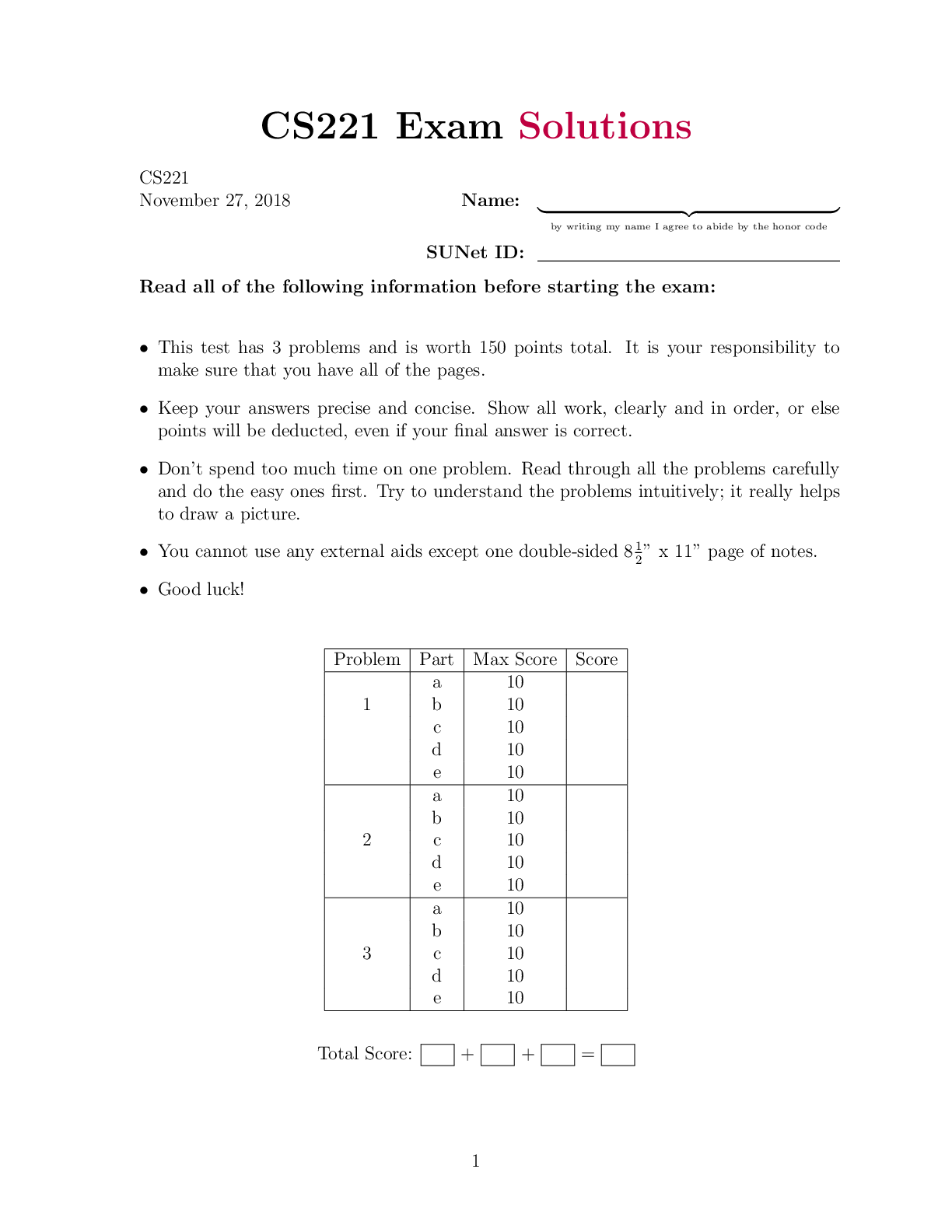

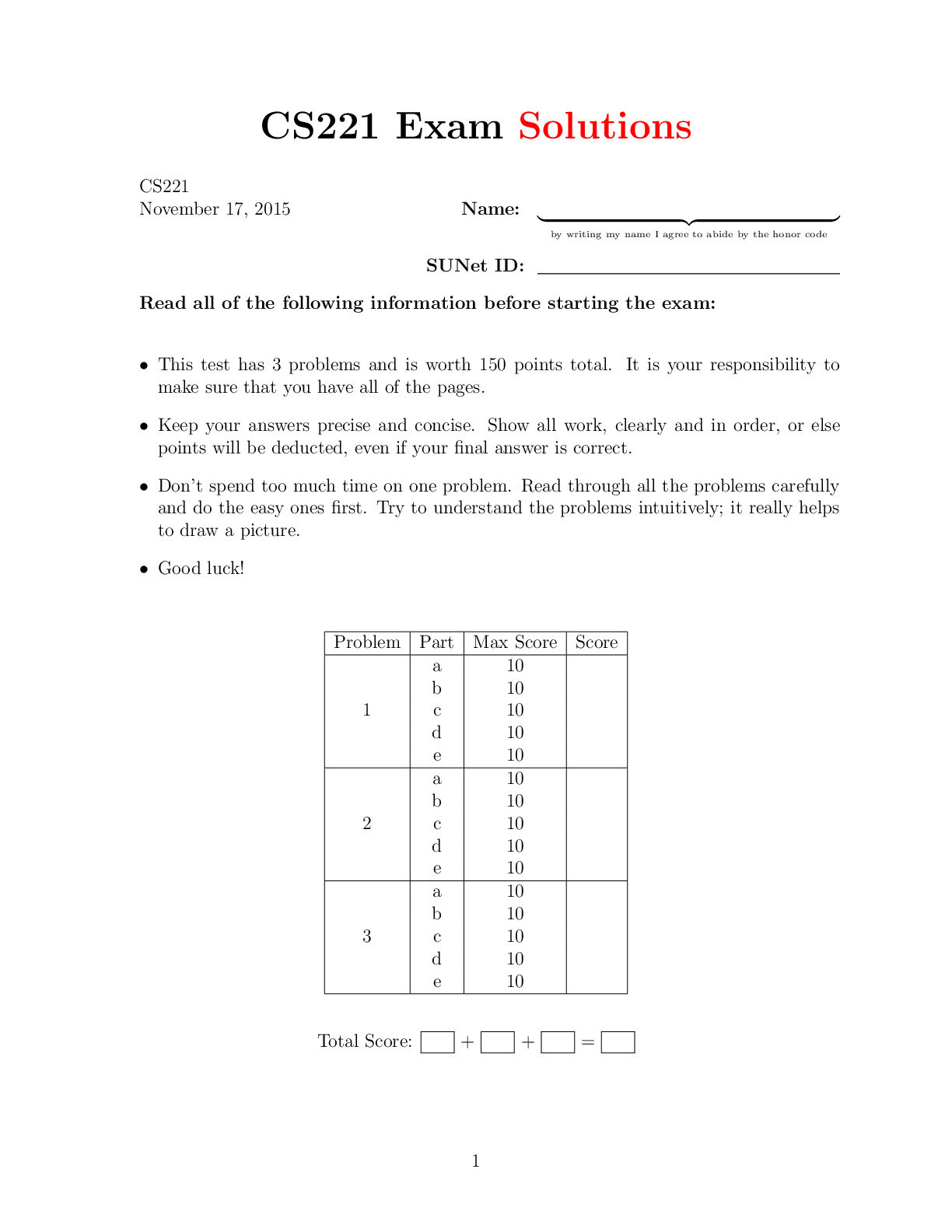

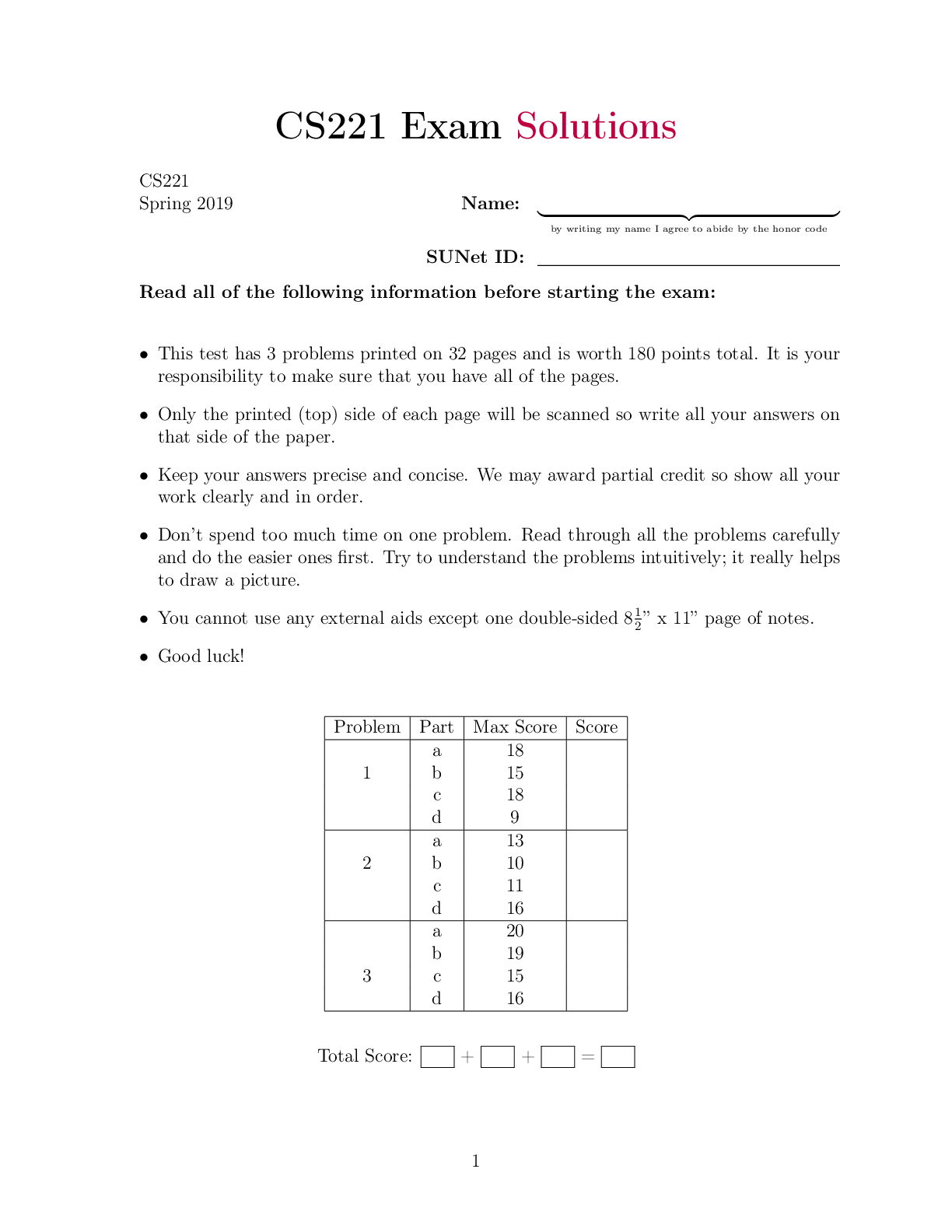

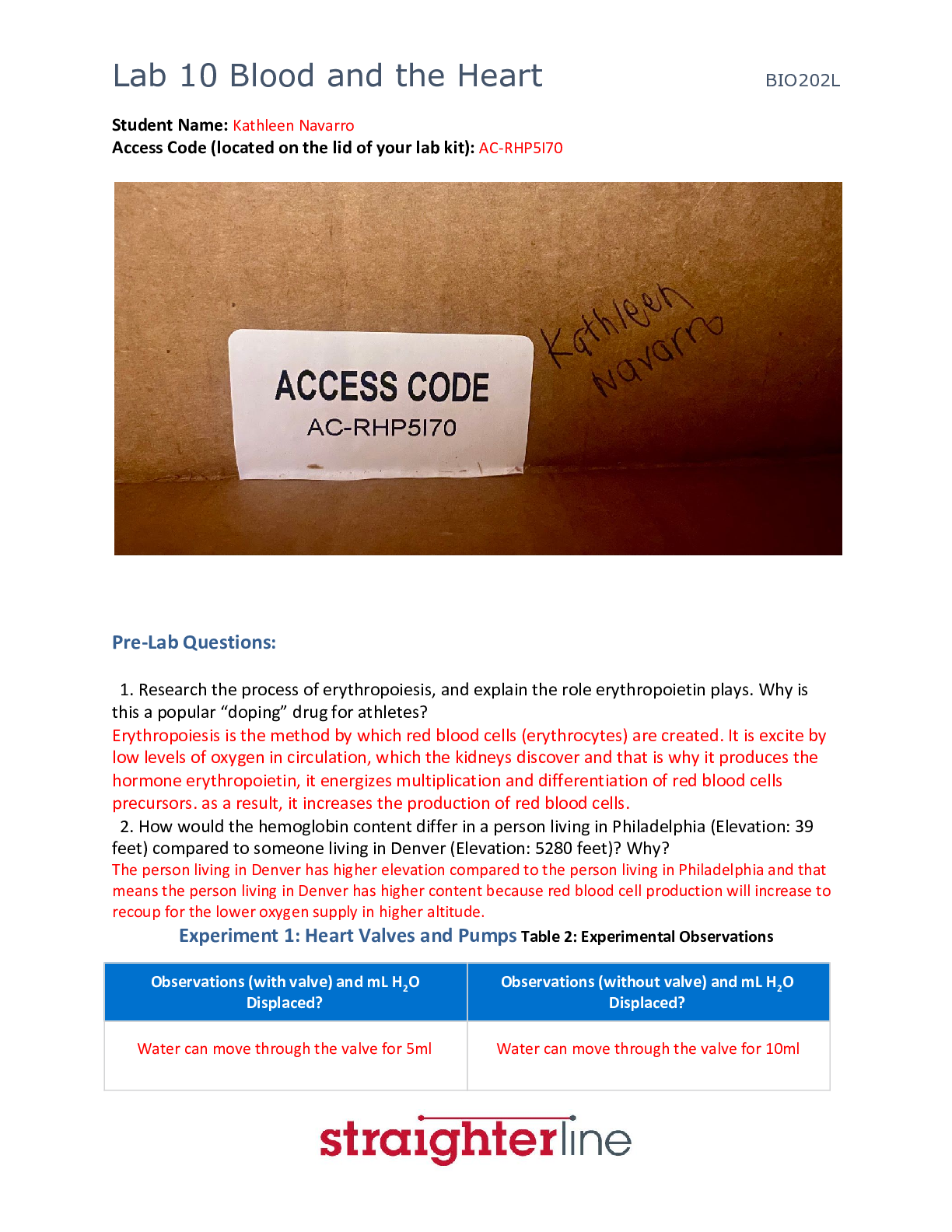

CS221 Midterm Solutions

CS221, November 18, 2014

Name: ______________________ (by writing my name I agree to abide by the honor code)

SUNet ID: ______________________

Read all of the following information before starting the exam:

• ... This test has 3 problems and is worth 150 points total. It is your responsibility to make sure that you have all of the pages.
• Keep your answers precise and concise. Show all work, clearly and in order, or else points will be deducted, even if your final answer is correct.
• Don't spend too much time on one problem. Read through all the problems carefully and do the easy ones first. Try to understand the problems intuitively; it really helps to draw a picture.
• Good luck!

| Problem | Part | Max Score | Score |
|---|---|---|---|
| 1 | a | 10 | |
| | b | 10 | |
| | c | 10 | |
| | d | 10 | |
| | e | 10 | |
| 2 | a | 10 | |
| | b | 10 | |
| | c | 10 | |
| | d | 10 | |
| | e | 10 | |
| 3 | a | 10 | |
| | b | 10 | |
| | c | 10 | |
| | d | 10 | |
| | e | 10 | |

Total Score: ____ + ____ + ____ = ____

1. Enchaining Realm (50 points)

This problem is about machine learning.

a. (10 points) Suppose we want to predict a real-valued output $y \in \mathbb{R}$ given an input $x = (x_1, x_2) \in \mathbb{R}^2$, which is represented by a feature vector $\phi(x) = (x_1, |x_1 - x_2|)$. Consider the following training set of $(x, y)$ pairs:

$$D_{\text{train}} = \{((1, 2), 2),\ ((1, 1), 1),\ ((2, 1), 3)\}. \tag{1}$$

We use a modified squared loss function, which penalizes overshooting twice as much as undershooting:

$$\text{Loss}(x, y, w) = \begin{cases} \frac{1}{2}\,(w \cdot \phi(x) - y)^2 & \text{if } w \cdot \phi(x) < y \\ (w \cdot \phi(x) - y)^2 & \text{otherwise} \end{cases} \tag{2}$$

Using a fixed learning rate of $\eta = 1$, apply the stochastic gradient descent algorithm to this training set, starting from $w = [0, 0]$, looping through each example $(x, y)$ in order, and performing the following update:

$$w \leftarrow w - \eta \nabla_w \text{Loss}(x, y, w). \tag{3}$$

For each example in the training set, calculate the loss on that example and update the weight vector $w$ to fill in the table below:

| | $x$ | $\phi(x)$ | $\text{Loss}(x, y, w)$ | $\nabla_w \text{Loss}(x, y, w)$ | weights $w$ |
|---|---|---|---|---|---|
| Initialization | n/a | n/a | n/a | n/a | [0, 0] |
| After example 1 | (1, 2) | | | | |
| After example 2 | (1, 1) | | | | |
| After example 3 | (2, 1) | | | | |

Solution

| | $x$ | $\phi(x)$ | $\text{Loss}(x, y, w)$ | $\nabla_w \text{Loss}(x, y, w)$ | weights $w$ |
|---|---|---|---|---|---|
| Initialization | n/a | n/a | n/a | n/a | [0, 0] |
| After example 1 | (1, 2) | (1, 1) | 2 | [−2, −2] | [2, 2] |
| After example 2 | (1, 1) | (1, 0) | 1 | [2, 0] | [0, 2] |
| After example 3 | (2, 1) | (2, 1) | 0.5 | [−2, −1] | [2, 3] |
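As a cross-check of the solution table, here is a minimal Python sketch (not part of the original exam) that replays one in-order SGD pass over $D_{\text{train}}$ with $\eta = 1$. Differentiating the loss in (2) gives $\nabla_w \text{Loss} = (w \cdot \phi(x) - y)\,\phi(x)$ when $w \cdot \phi(x) < y$ and $2(w \cdot \phi(x) - y)\,\phi(x)$ otherwise, and the code applies exactly that case split. The helper names `phi`, `dot`, `loss_and_grad`, and `sgd_one_pass` are hypothetical, chosen only for illustration.

```python
from typing import List, Tuple

# Inputs, feature vectors, and weights are all 2-D in this problem.
Vector = Tuple[float, float]


def phi(x: Vector) -> Vector:
    """Feature map phi(x) = (x1, |x1 - x2|)."""
    x1, x2 = x
    return (x1, abs(x1 - x2))


def dot(u: Vector, v: Vector) -> float:
    return u[0] * v[0] + u[1] * v[1]


def loss_and_grad(x: Vector, y: float, w: Vector) -> Tuple[float, Vector]:
    """Asymmetric squared loss (2) and its gradient with respect to w."""
    f = phi(x)
    prediction = dot(w, f)
    residual = prediction - y
    if prediction < y:
        # Undershoot: 1/2 * residual^2, gradient = residual * phi(x).
        loss, scale = 0.5 * residual ** 2, residual
    else:
        # Overshoot (or exact hit): residual^2, gradient = 2 * residual * phi(x).
        loss, scale = residual ** 2, 2 * residual
    return loss, (scale * f[0], scale * f[1])


def sgd_one_pass(
    data: List[Tuple[Vector, float]], w: Vector, eta: float = 1.0
) -> List[Tuple[Vector, Vector, float, Vector, Vector]]:
    """One in-order SGD pass; returns (x, phi(x), loss, grad, updated w) per example."""
    rows = []
    for x, y in data:
        loss, grad = loss_and_grad(x, y, w)  # evaluated at w before the update
        w = (w[0] - eta * grad[0], w[1] - eta * grad[1])
        rows.append((x, phi(x), loss, grad, w))
    return rows


D_train = [((1, 2), 2), ((1, 1), 1), ((2, 1), 3)]

if __name__ == "__main__":
    for x, f, loss, grad, w in sgd_one_pass(D_train, (0.0, 0.0)):
        print(x, f, loss, grad, w)
```

Each printed row lines up with one row of the solution table: the losses come out as 2, 1, and 0.5, and the weights end at [2, 3]. Note that the loss for each example is computed with the weights from before that example's update, which is why example 2 lands in the overshooting branch ($w \cdot \phi(x) = 2 > 1$).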

