
Midterm Exam Solutions CMU 10-601: Machine Learning (Spring 2016) Carnegie Mellon University


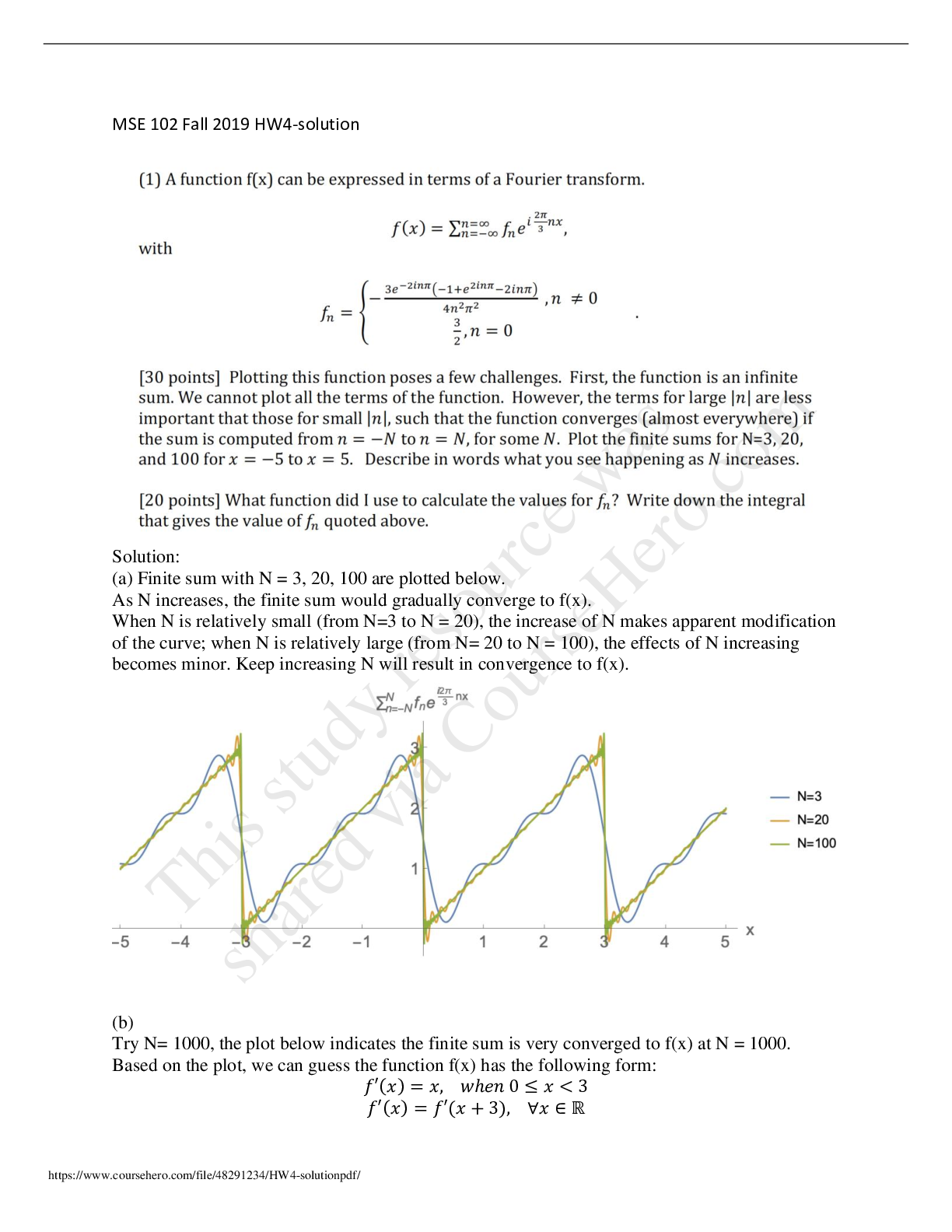

Feb. 29, 2016

Name:
Andrew ID:

START HERE: Instructions

• This exam has 17 pages and 5 Questions (page one is this cover page)... Check to see if any pages are missing. Enter your name and Andrew ID above.
• You are allowed to use one page of notes, front and back.
• Electronic devices are not acceptable.
• Note that the questions vary in difficulty. Make sure to look over the entire exam before you start and answer the easier questions first.

| Question | Points | Score |
|---|---|---|
| 1 | 20 | |
| 2 | 20 | |
| 3 | 20 | |
| 4 | 20 | |
| 5 | 20 | |
| Extra Credit | 14 | |
| Total | 114 | |

1 Naive Bayes, Probability, and MLE [20 pts. + 2 Extra Credit]

1.1 Naive Bayes

You are given a data set of 10,000 students with their sex, height, and hair color. You are trying to build a classifier to predict the sex of a student, so you randomly split the data into a training set and a testing set.

Here are the specifications of the data set:

• $\text{sex} \in \{\text{male}, \text{female}\}$
• $\text{height} \in [0, 300]$ centimeters
• $\text{hair} \in \{\text{brown}, \text{black}, \text{blond}, \text{red}, \text{green}\}$
• 3240 men in the data set
• 6760 women in the data set

Under the assumptions necessary for Naive Bayes (not the distributional assumptions you might naturally or intuitively make about the dataset), answer each question with T or F and provide a one-sentence explanation of your answer:

(a) [2 pts.] T or F: As height is a continuous valued variable, Naive Bayes is not appropriate since it cannot handle continuous valued variables.

Solution: False. Naive Bayes can handle both continuous and discrete values as long as the appropriate distributions are used for the conditional probabilities, for example, Gaussian for continuous and Bernoulli for discrete.

(b) [2 pts.] T or F: Since there is not a similar number of men and women in the dataset, Naive Bayes will have high test error.

Solution: False. Since the data was randomly split, the same proportion of male and female will be in the training and testing sets. Thus this discrepancy will not affect testing error.

(c) [2 pts.] T or F: $P(\text{height} \mid \text{sex}, \text{hair}) = P(\text{height} \mid \text{sex})$.

Solution: True. This results from the conditional independence assumption required for Naive Bayes.

(d) [2 pts.] T or F: $P(\text{height}, \text{hair} \mid \text{sex}) = P(\text{height} \mid \text{sex})\,P(\text{hair} \mid \text{sex})$.

Solution: True. This results from the conditional independence assumption required for Naive Bayes.

1.2 Maximum Likelihood Estimation (MLE)

Assume we have a random sample that is Bernoulli distributed, $X_1, \ldots, X_n \sim \text{Bernoulli}(\theta)$. We are going to derive the MLE for $\theta$. Recall that a Bernoulli random variable $X$ takes values in $\{0, 1\}$ and has probability mass function given by

$$P(X; \theta) = \theta^{X}(1 - \theta)^{1 - X}.$$
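The Bernoulli probability mass function above can be checked numerically. The following is a minimal Python sketch, not part of the exam solution: for an i.i.d. sample, the log-likelihood is the sum of the log PMF terms, and the standard closed-form maximizer is the sample mean. The function names (`bernoulli_log_likelihood`, `bernoulli_mle`) and the sample values are hypothetical, chosen only for illustration.

```python
import math


def bernoulli_log_likelihood(theta, xs):
    """Log-likelihood of i.i.d. Bernoulli(theta) observations xs (each 0 or 1).

    Uses log P(x; theta) = x*log(theta) + (1 - x)*log(1 - theta), summed over xs.
    Requires 0 < theta < 1 so both logarithms are defined.
    """
    ones = sum(xs)
    zeros = len(xs) - ones
    return ones * math.log(theta) + zeros * math.log(1.0 - theta)


def bernoulli_mle(xs):
    """Closed-form maximizer of the log-likelihood: the sample mean."""
    return sum(xs) / len(xs)


# Hypothetical sample, made up for illustration only.
sample = [1, 0, 1, 1, 0, 1, 0, 1]
theta_hat = bernoulli_mle(sample)

# Sanity check: search a grid over (0, 1) for the best log-likelihood
# and compare the grid maximizer with the closed-form estimate.
grid = [i / 1000 for i in range(1, 1000)]
best_on_grid = max(grid, key=lambda t: bernoulli_log_likelihood(t, sample))

print("closed-form estimate:", theta_hat)
print("grid maximizer:", best_on_grid)
```

The grid search is only a sanity check. It assumes the sample contains at least one 0 and one 1, so that the maximizer lies strictly inside (0, 1).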

