LS 40 (Life Science) Homework 6 Solutions (Lecture 1 W21), updated in Jan 2021

Document Content and Description Below

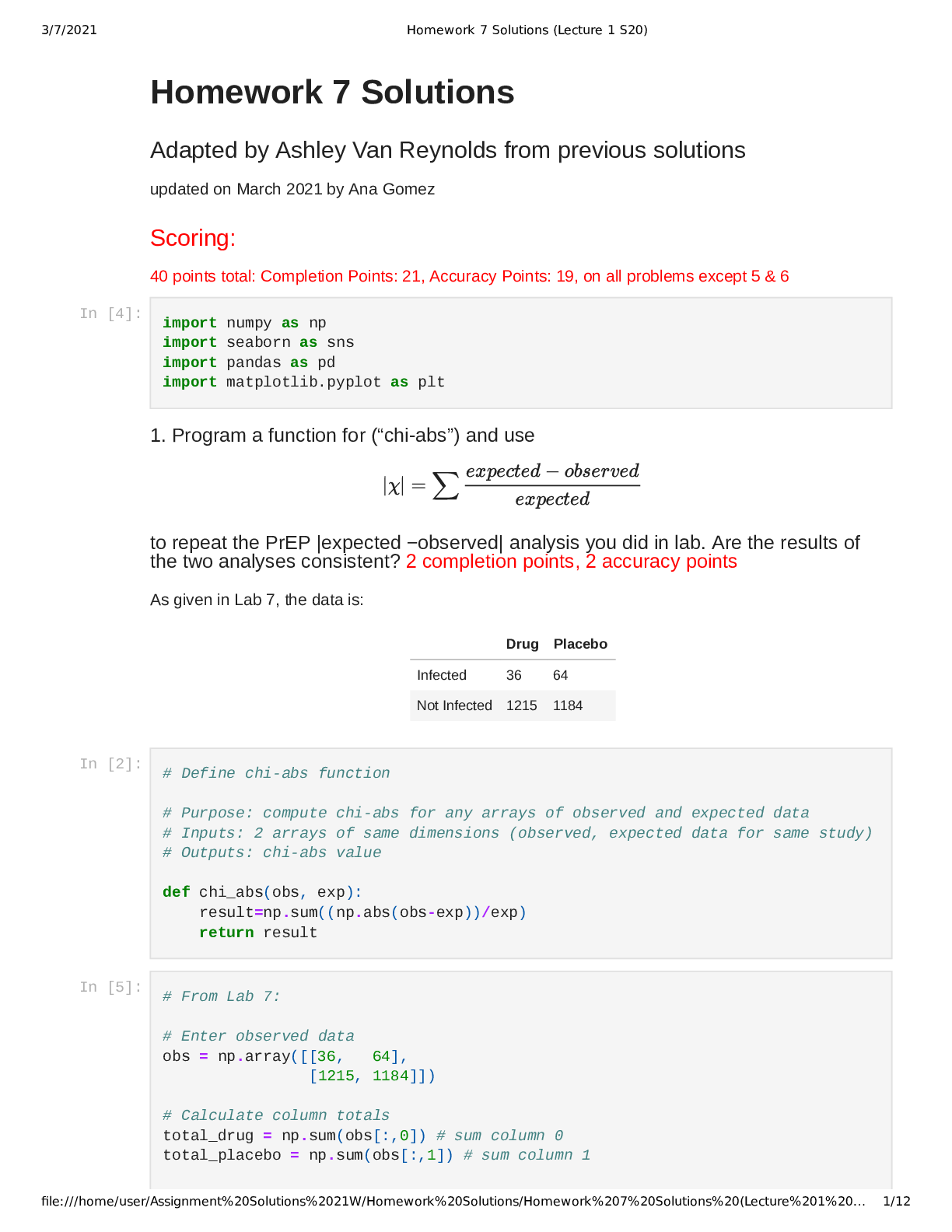

Homework 6 Solutions
By Ashley Van Reynolds, adapted from previous solutions
Completion Points: 27, Accuracy Points: 13 (all problems)

1. Starting in Lab 4, why did we suggest using np.zeros to store the results of your simulations instead of just appending? Hint: this is actually a really important technical point for doing simulations! (2 completion points, 1 accuracy point)

If you know the length of your list in advance, it's faster and more efficient for the computer to preallocate enough space for all of the results and then fill them in. The alternative is making room in memory again and again for each append. Another, smaller advantage is that preallocating protects you from accidentally appending objects of a different size. This is a common mistake when dealing with functions that output more than one value. Also, many of the calculations we do require NumPy arrays. For example, to calculate $F_{like}$ and $|\chi|$/$\chi^2$, we needed to multiply, add, subtract, or divide entire columns at once.

Aside: Python tries to smartly amortize adding additional space, though. So if you don't know the length of the list ahead of time (logging data on a server, for instance), it may be better to just append to the default list object. You can then convert it to an array afterwards for NumPy's additional processing speed.

2. A graduate student tells you that he has statistically compared 10 different popular “fad” diets. He found that 2 of them showed statistically significant weight loss after 1 week, each with a p-value < 0.01. Incredulous, you ask, “but did you correct for multiple testing?” The graduate student did not. He explains that he doesn’t understand why that’s important, retorting, “if I do the science right, then results are results.” What would you say to convince him of the importance of correcting for multiple testing?
(3 completion points, 2 accuracy points)

This response is pretty open-ended, but a couple of possible responses might be along the lines of the following:

* An example: "Suppose that I told you I'd give you a million dollars if you roll a 20 on a twenty-sided die at least once. Are you more likely to win if you roll the die once or ten times?"
* An explanation: "I would explain to the person that the more times you conduct a test, the more likely it is that you get a false positive. A p-value of 0.01 means that, even if a diet had no real effect, there would still be a 1/100 chance of seeing a result at least this extreme just by random chance. A 1/100 chance is still a chance, and the more often the test is repeated, the more likely it is to be hit by accident."

In [1]:
```python
%matplotlib inline
import matplotlib as mat  # import matplotlib
import seaborn as sns  # import seaborn
import numpy as np  # import numpy
import pandas as pd  # import pandas
import matplotlib.pyplot as plt  # import pyplot for plotting
```

3. Which is more “conservative,” meaning it will produce the results with the fewest “significant” findings: the Benjamini-Hochberg correction or the Bonferroni correction? Describe the advantages and disadvantages of using each. (3 completion points, 2 accuracy points)

The Bonferroni correction is more conservative. The Bonferroni correction divides the original alpha cutoff by the number of pairwise tests. The main disadvantage is that this is a very strict test. Each comparison must pass a much smaller cutoff, which keeps the combined false positive rate (family-wise error rate) at or below the original alpha cutoff. The price is that false negatives become more likely. The main advantages are that the false positive rate will be very low and that this correction is fairly simple to do and explain. To avoid this overly strict cutoff and its high false negative rate, we can use the Benjamini-Hochberg method.
The Benjamini-Hochberg method starts by sorting the $m$ p-values from smallest to largest, $p_{(1)} \le p_{(2)} \le \dots \le p_{(m)}$. Each ordered p-value is then compared to alpha multiplied by its index divided by the total number of pairwise tests, $\frac{i}{m}\alpha$. Then, you find the largest ordered p-value such that $p_{(i)} \le \frac{i}{m}\alpha$, and call its index $k$. We can then reject the null hypothesis for the comparisons corresponding to the p-values whose indexes are less than or equal to $k$.

The Benjamini-Hochberg method still corrects for multiple testing, but it should not cause as many false negatives. The main disadvantage is that this procedure is more complicated and thus harder to explain and apply. The main advantage is that the chosen alpha cutoff is used directly, as a bound on the false discovery rate. The false discovery rate is the expected fraction of "significant" results that are false positives. As a result, fewer true effects are missed than with Bonferroni.

NOTE: We explain the two methods in some detail here for your benefit; this level of detail is not required.
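The arithmetic behind questions 2 and 3 can be checked with a short script. This is a minimal sketch that makes three assumptions:

* The tests are independent.
* The ten p-values are made up for illustration; they are not homework data.
* `family_wise_error_rate`, `bonferroni`, and `benjamini_hochberg` are hypothetical helpers written for this example, not functions from the course materials.

`benjamini_hochberg` follows the step-up procedure: sort, compare each $p_{(i)}$ to $\frac{i}{m}\alpha$, find the largest passing index $k$, and reject the $k$ smallest p-values.

```python
import numpy as np

def family_wise_error_rate(alpha, m):
    """P(at least one false positive) across m independent tests when every null is true."""
    return 1 - (1 - alpha) ** m

def bonferroni(pvals, alpha=0.05):
    """Boolean mask (original order): reject H0 where p <= alpha / m."""
    pvals = np.asarray(pvals, dtype=float)
    return pvals <= alpha / len(pvals)

def benjamini_hochberg(pvals, alpha=0.05):
    """Boolean mask (original order) from the Benjamini-Hochberg step-up procedure."""
    pvals = np.asarray(pvals, dtype=float)
    m = len(pvals)
    order = np.argsort(pvals)                     # positions of the p-values, smallest first
    thresholds = alpha * np.arange(1, m + 1) / m  # (i/m) * alpha for i = 1..m
    passes = pvals[order] <= thresholds
    reject = np.zeros(m, dtype=bool)              # preallocated result
    if passes.any():
        k = np.nonzero(passes)[0].max()           # 0-based position of the largest passing i
        reject[order[:k + 1]] = True              # reject the k+1 smallest p-values
    return reject

# Question 2: ten diets, each tested at alpha = 0.01, with no diet actually working.
print(f"{family_wise_error_rate(0.01, 10):.3f}")       # 0.096
# The die analogy: chance of at least one 20 in ten rolls of a twenty-sided die.
print(f"{family_wise_error_rate(1 / 20, 10):.3f}")     # 0.401
# Bonferroni: testing each diet at 0.01 / 10 keeps the family-wise rate just under 0.01.
print(f"{family_wise_error_rate(0.01 / 10, 10):.5f}")  # 0.00996

# Question 3: hypothetical p-values from ten comparisons.
pvals = [0.47, 0.011, 0.89, 0.001, 0.15, 0.019, 0.62, 0.041, 0.008, 0.33]
print(int((np.asarray(pvals) <= 0.05).sum()))  # 5 "significant" with no correction
print(int(bonferroni(pvals).sum()))            # 1 with Bonferroni
print(int(benjamini_hochberg(pvals).sum()))    # 4 with Benjamini-Hochberg
```

On these made-up values, Bonferroni keeps the fewest findings, which matches the answer that it is the more conservative correction. For real analyses, `statsmodels.stats.multitest.multipletests(pvals, method="fdr_bh")` provides a tested implementation.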

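Returning to question 1, the difference between preallocating and appending can be sketched as follows. This is a minimal illustration. It assumes a hypothetical `simulate_once` function that stands in for one simulation from the labs (here, the mean of 20 normal draws). `np.append` does not grow an array in place. Instead, it allocates and fills a new array every time, so the appending version copies more and more data as the loop goes on. Timings depend on the machine, so no numbers are claimed here.

```python
import time
import numpy as np

def simulate_once(rng):
    """Hypothetical stand-in for one simulation: the mean of 20 normal draws."""
    return rng.normal(size=20).mean()

def with_zeros(n, seed=0):
    rng = np.random.default_rng(seed)
    results = np.zeros(n)                  # allocate all n slots once
    for i in range(n):
        results[i] = simulate_once(rng)    # fill slot i in place
    return results

def with_np_append(n, seed=0):
    rng = np.random.default_rng(seed)
    results = np.array([])
    for _ in range(n):
        # np.append returns a new array, copying every earlier result each time
        results = np.append(results, simulate_once(rng))
    return results

def with_list_append(n, seed=0):
    rng = np.random.default_rng(seed)
    results = []
    for _ in range(n):
        results.append(simulate_once(rng))  # Python lists over-allocate (amortized growth)
    return np.array(results)                # convert to an array once at the end

n_sims = 5_000
for method in (with_zeros, with_np_append, with_list_append):
    start = time.perf_counter()
    method(n_sims)
    print(f"{method.__name__}: {time.perf_counter() - start:.4f} s")

# Preallocation also catches a wrong-sized result at the moment it happens.
results = np.zeros(3)
try:
    results[0] = (1.0, 2.0)  # e.g. a function that returned two values by mistake
except ValueError as err:
    print("caught:", err)
```

With the same seed, all three functions produce identical results; only the way memory is managed differs. The list-then-convert pattern in `with_list_append` corresponds to the aside in question 1, for when the number of results is not known ahead of time.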
Uploaded on: Jul 09, 2021
Number of pages: 13
