
CS 234 assignment 2-ALL ANSWERS 100% CORRECT

Document Content and Description Below

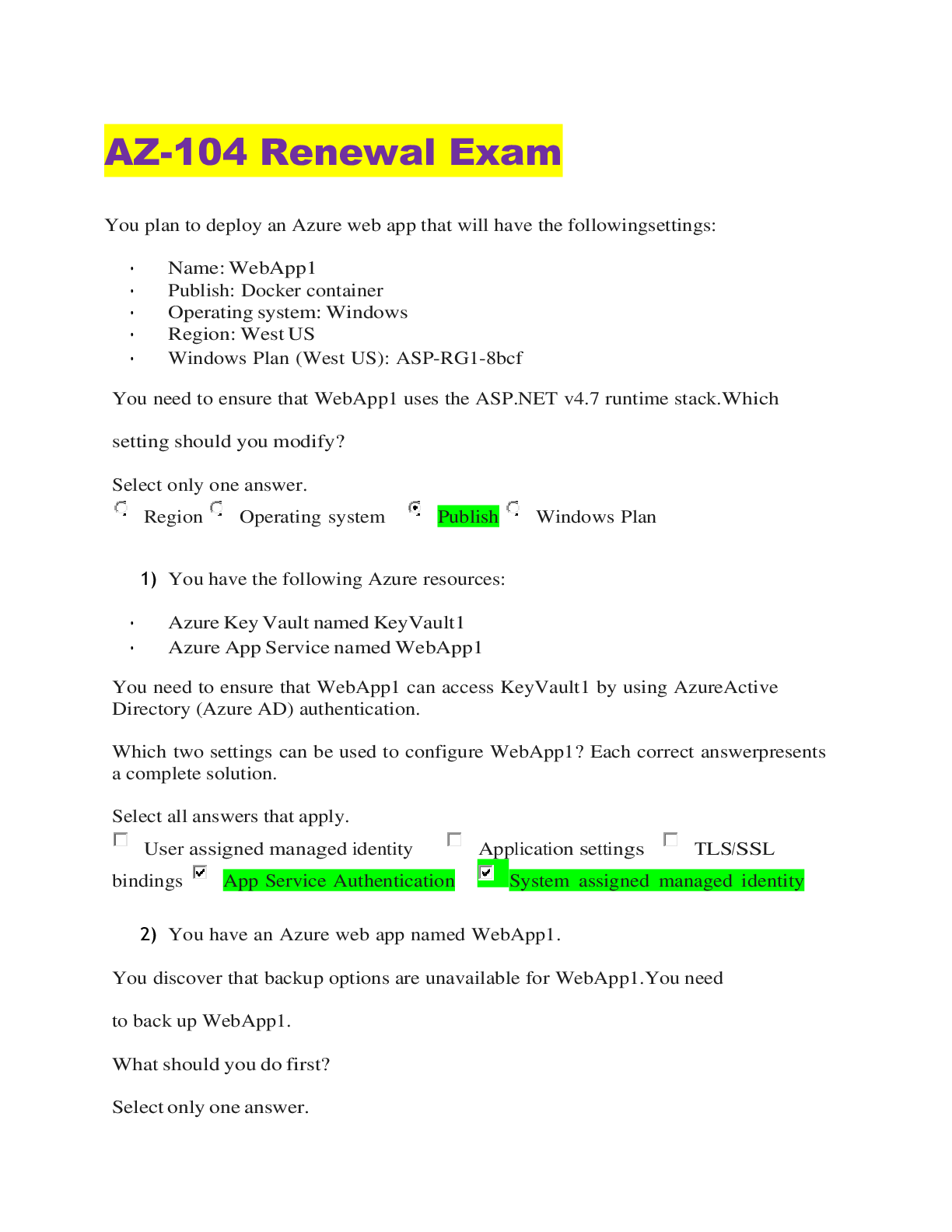

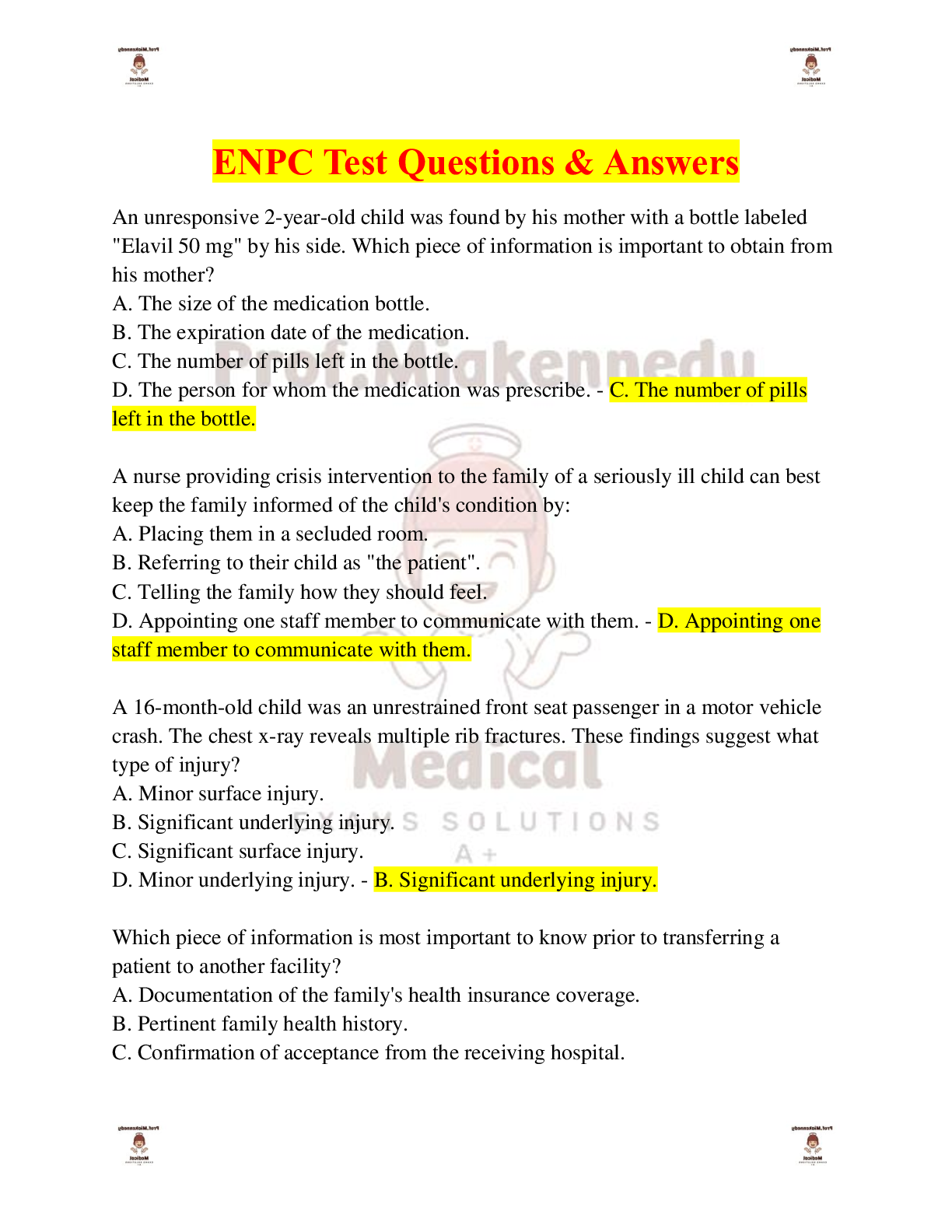

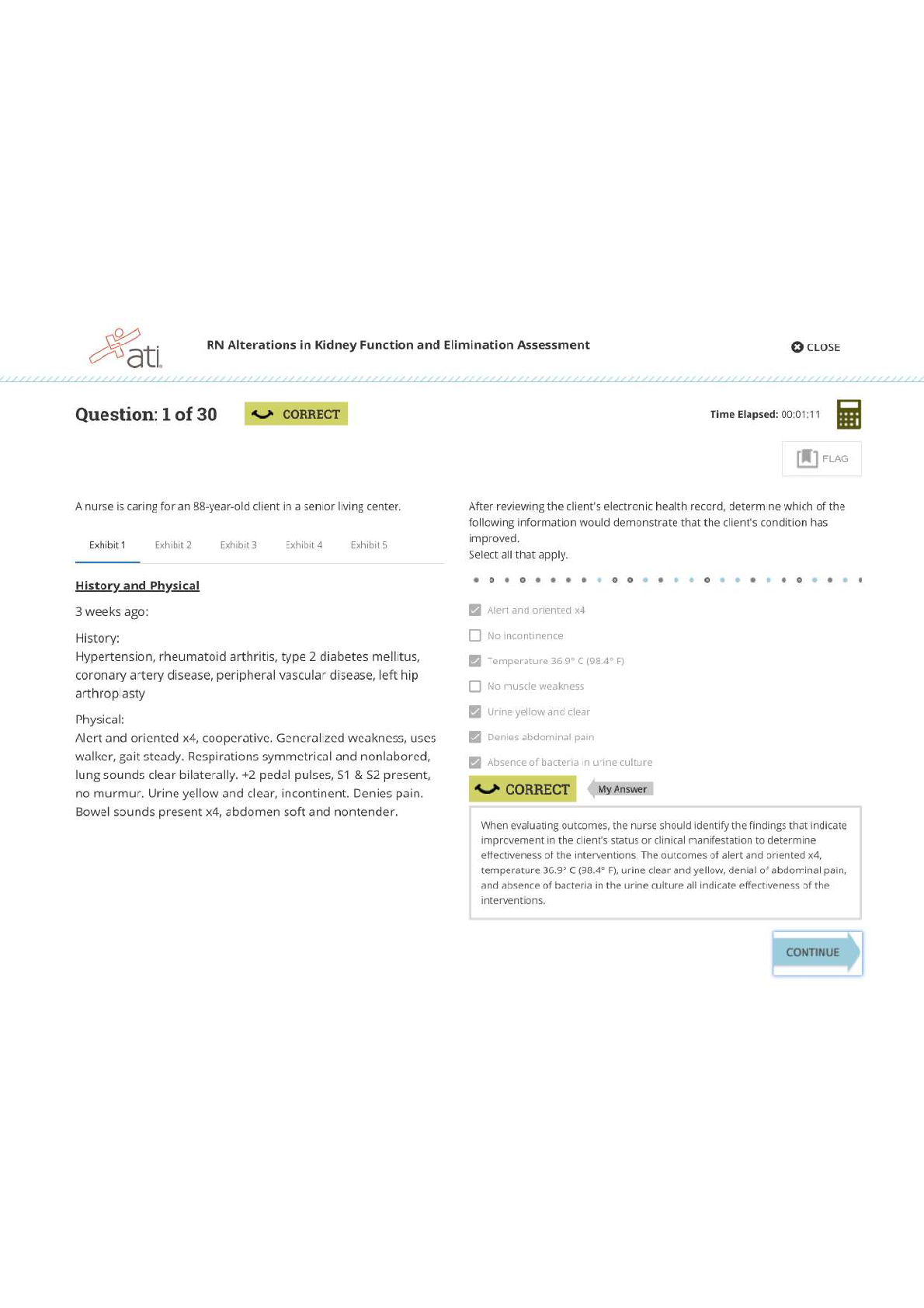

CS 234 Winter 2020: Assignment #2

Introduction

In this assignment we will implement deep Q-learning, following DeepMind's papers ([mnih2015human] and [mnih-atari-2013]) on learning to play Atari games from raw pixels. The purpose is to demonstrate the effectiveness of deep neural networks, as well as some of the techniques used in practice to stabilize training and achieve better performance. In the process, you'll become familiar with TensorFlow. We will train our networks on the Pong-v0 environment from OpenAI gym, but the code can easily be applied to any other environment.

In Pong, one player scores if the ball passes by the other player. An episode is over when one of the players reaches 21 points. Thus, the total return of an episode is between −21 (lost every point) and +21 (won every point). Our agent plays against a decent hard-coded AI player. Average human performance is −3 (reported in [mnih-atari-2013]). In this assignment, you will train an AI agent with super-human performance, reaching at least +10 (hopefully more!).

0 Test Environment (6 pts)

Before running our code on Pong, it is crucial to test it on a simpler environment. In this problem, you will reason about optimality in the provided test environment by hand; later, to sanity-check your code, you will verify that your implementation is able to achieve this optimal value. You should be able to run your models on CPU in no more than a few minutes on the following environment:

• 4 states: 0, 1, 2, 3
• 5 actions: 0, 1, 2, 3, 4. Action i with 0 ≤ i ≤ 3 goes to state i, while action 4 makes the agent stay in the same state.
• Rewards: Going to state i from states 0, 1, or 3 gives a reward R(i), where R(0) = 0.1, R(1) = −0.2, R(2) = 0, R(3) = −0.1. When the transition starts in state 2, the rewards defined above are multiplied by −10. See Table 1 for the full transition and reward structure.
• One episode lasts 5 time steps (for a total of 5 actions) and always starts in state 0 (no reward is given at the initial state).

| State (s) | Action (a) | Next State (s′) | Reward (R) |
|---|---|---|---|
| 0 | 0 | 0 | 0.1 |
| 0 | 1 | 1 | -0.2 |
| 0 | 2 | 2 | 0.0 |
| 0 | 3 | 3 | -0.1 |
| 0 | 4 | 0 | 0.1 |
| 1 | 0 | 0 | 0.1 |
| 1 | 1 | 1 | -0.2 |
| 1 | 2 | 2 | 0.0 |
| 1 | 3 | 3 | -0.1 |
| 1 | 4 | 1 | -0.2 |
| 2 | 0 | 0 | -1.0 |
| 2 | 1 | 1 | 2.0 |
| 2 | 2 | 2 | 0.0 |
| 2 | 3 | 3 | 1.0 |
| 2 | 4 | 2 | 0.0 |
| 3 | 0 | 0 | 0.1 |
| 3 | 1 | 1 | -0.2 |
| 3 | 2 | 2 | 0.0 |
| 3 | 3 | 3 | -0.1 |
| 3 | 4 | 3 | -0.1 |

Table 1: Transition table for the Test Environment

An example of a trajectory (or episode) in the test environment is shown in Figure 1, and the trajectory can be represented in terms of $s_t$, $a_t$, $R_t$ as:

$$s_0 = 0,\ a_0 = 1,\ R_0 = -0.2,\ s_1 = 1,\ a_1 = 2,\ R_1 = 0,\ s_2 = 2,\ a_2 = 4,\ R_2 = 0,\ s_3 = 2,\ a_3 = 3,\ R_3 = (-0.1) \cdot (-10) = 1,\ s_4 = 3,\ a_4 = 0,\ R_4 = 0.1,\ s_5 = 0.$$

Figure 1: Example of a trajectory in the Test Environment

1. (written 6 pts) What is the maximum sum of rewards that can be achieved in a single trajectory in the test environment, assuming γ = 1? Show first that this value is attainable in a single trajectory, and then briefly argue why no other trajectory can achieve greater cumulative reward.

Solution: The optimal return in the Test Environment is 4.1. To prove this, we first establish an upper bound of 4.1 with three key observations:
• First, the largest single reward is 2.0, obtained by the transition 2 → 1. Any reward above 0.1 requires leaving state 2 (2 → 1 gives 2.0, 2 → 3 gives 1.0), while every step that ends in state 2 earns 0.
• Second, after performing this optimal transition we are in state 1, so we must spend at least one zero-reward step returning to state 2 before executing it again. Since the episode starts in state 0, the first step cannot be an optimal move, so within 5 steps we can execute at most 2 optimal moves. Executing fewer than 2 would yield a strictly smaller result (at most 3.1).
• Third, executing 2 optimal moves requires entering state 2 twice, which gives 0 reward on 2 steps, so these 4 steps give at most 4. The remaining step cannot be another positive-reward exit from state 2, since that would need a further zero-reward step into state 2 (6 steps in total); every other transition earns at most 0.1. This yields an upper bound of 4 + 0.1 = 4.1.
The path 0 → 2 → 1 → 2 → 1 → 0 attains this bound, with rewards 0 + 2 + 0 + 2 + 0.1 = 4.1.
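As a sanity check on this solution, the Test Environment can be encoded directly from the reward rule behind Table 1 and every one of the 5^5 = 3125 action sequences enumerated. The sketch below is a minimal plain-Python illustration; the helper names `step` and `episode_return` are hypothetical and are not part of the assignment's starter code. It computes the return of the example trajectory (0.9) and the brute-force optimum under γ = 1.

```python
from itertools import product

# R(i): reward for moving into state i from states 0, 1 or 3 (values from the text).
BASE_REWARD = {0: 0.1, 1: -0.2, 2: 0.0, 3: -0.1}
N_ACTIONS, HORIZON = 5, 5


def step(state, action):
    """Return (next_state, reward) for one transition of the Test Environment."""
    next_state = state if action == 4 else action  # actions 0-3 jump, action 4 stays
    reward = BASE_REWARD[next_state]
    if state == 2:  # transitions that start in state 2 are scaled by -10
        reward *= -10
    return next_state, reward


def episode_return(actions, start=0):
    """Undiscounted (gamma = 1) sum of rewards for a sequence of actions."""
    state, total = start, 0.0
    for action in actions:
        state, reward = step(state, action)
        total += reward
    return total


# Example trajectory from the text: actions a_0..a_4 = 1, 2, 4, 3, 0.
example = episode_return([1, 2, 4, 3, 0])

# Exhaustive search over all 5**5 = 3125 action sequences of length 5.
best_actions = max(product(range(N_ACTIONS), repeat=HORIZON), key=episode_return)
best = episode_return(best_actions)

print(f"example return = {example:.1f}, optimum = {best:.1f}")
```

Running it prints `example return = 0.9, optimum = 4.1`. Several action sequences tie at 4.1 (for instance 2, 1, 2, 1, 0 and 0, 2, 1, 2, 1), so `best_actions` is just one of them.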
1 Q-Learning (24 pts)

Tabular setting. If the state and action spaces are sufficiently small, we can simply maintain a table containing the value of Q(s, a), an estimate of Q∗(s, a), for every (s, a) pair. In this tabular setting, given an experience sample (s, a, r, s′), the update rule is

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left( r + \gamma \max_{a' \in \mathcal{A}} Q(s', a') - Q(s, a) \right) \tag{1}$$

where α > 0 is the learning rate and γ ∈ [0, 1) is the discount factor.

Approximation setting. Due to the scale of Atari environments, we cannot reasonably learn and store a Q value for each state-action tuple. We will instead represent our Q values as a function $\hat{q}(s, a; w)$ where w are parameters of …
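Update (1) amounts to a few lines of code. The sketch below assumes a plain Python list-of-lists Q table sized for the Test Environment (4 states × 5 actions) and illustrative values α = 0.5 and γ = 0.9. The helper name `q_update`, the starting estimates for state 1, and the optional `done` flag (a common convention for terminal transitions that equation (1) itself does not include) are all hypothetical.

```python
def q_update(Q, s, a, r, s_next, alpha, gamma, done=False):
    """Apply the tabular Q-learning update (1) to Q in place; return the TD error."""
    # Target r + gamma * max_{a'} Q(s', a'). The `done` flag is an assumption not in
    # equation (1): at a terminal transition the bootstrap term is commonly dropped.
    target = r if done else r + gamma * max(Q[s_next])
    td_error = target - Q[s][a]
    Q[s][a] += alpha * td_error  # step of size alpha toward the target
    return td_error


# Q table for the Test Environment: 4 states x 5 actions, initialised to zero.
Q = [[0.0] * 5 for _ in range(4)]
Q[1] = [0.0, 1.0, 3.0, 0.0, 0.5]  # hypothetical current estimates for state 1

# Experience sample (s, a, r, s') = (2, 1, 2.0, 1): action 1 taken in state 2 (Table 1).
delta = q_update(Q, s=2, a=1, r=2.0, s_next=1, alpha=0.5, gamma=0.9)
print(f"TD error = {delta:.2f}, Q(2, 1) = {Q[2][1]:.2f}")
```

Here the target is 2.0 + 0.9 · 3.0 = 4.7, so the TD error is 4.7 and, with α = 0.5, Q(2, 1) moves halfway from 0 toward the target; the script prints `TD error = 4.70, Q(2, 1) = 2.35`.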


Uploaded on: Dec 03, 2022
Number of pages: 13
