Georgia Institute of Technology CS 7641 CSE/ISYE 6740 2015 Final Exam Sample Question

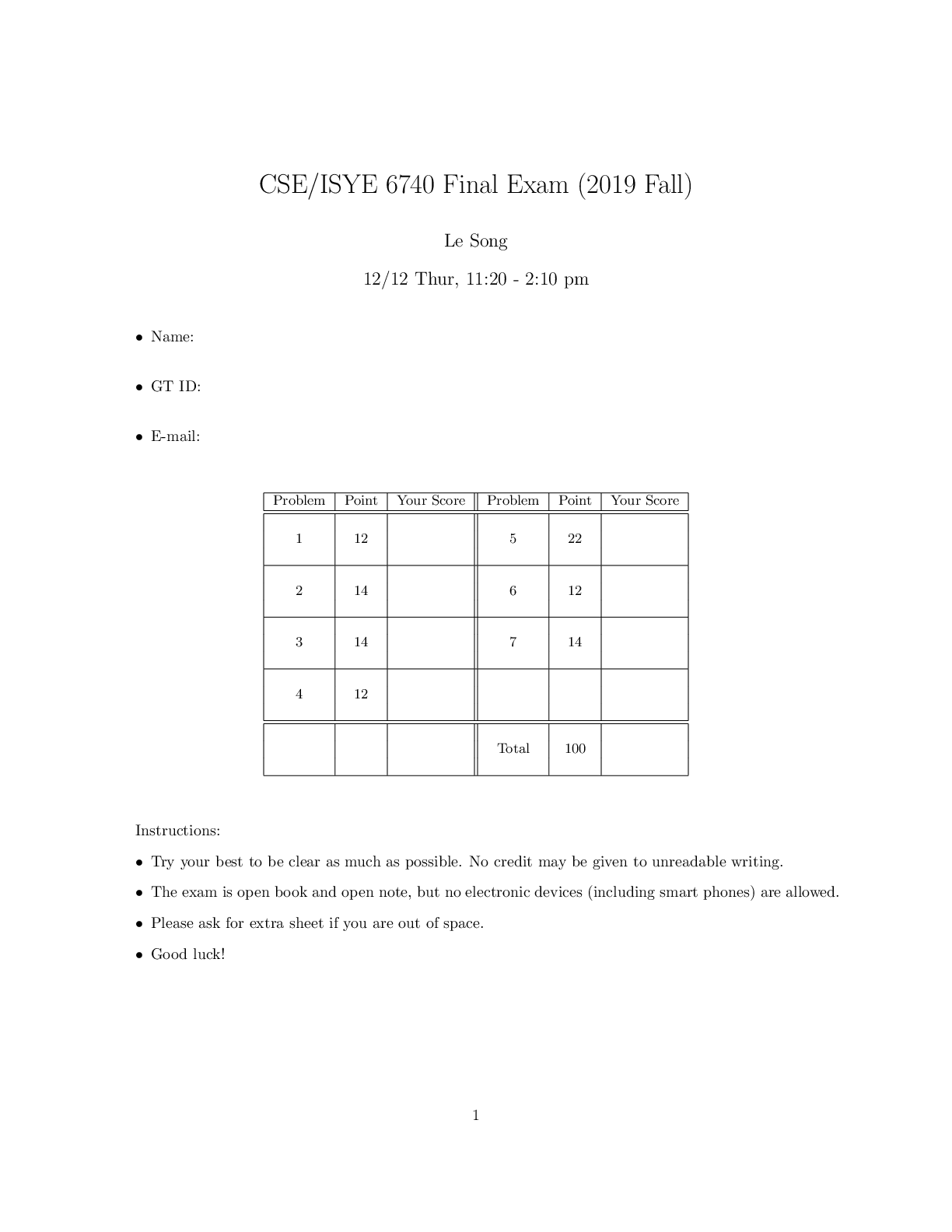

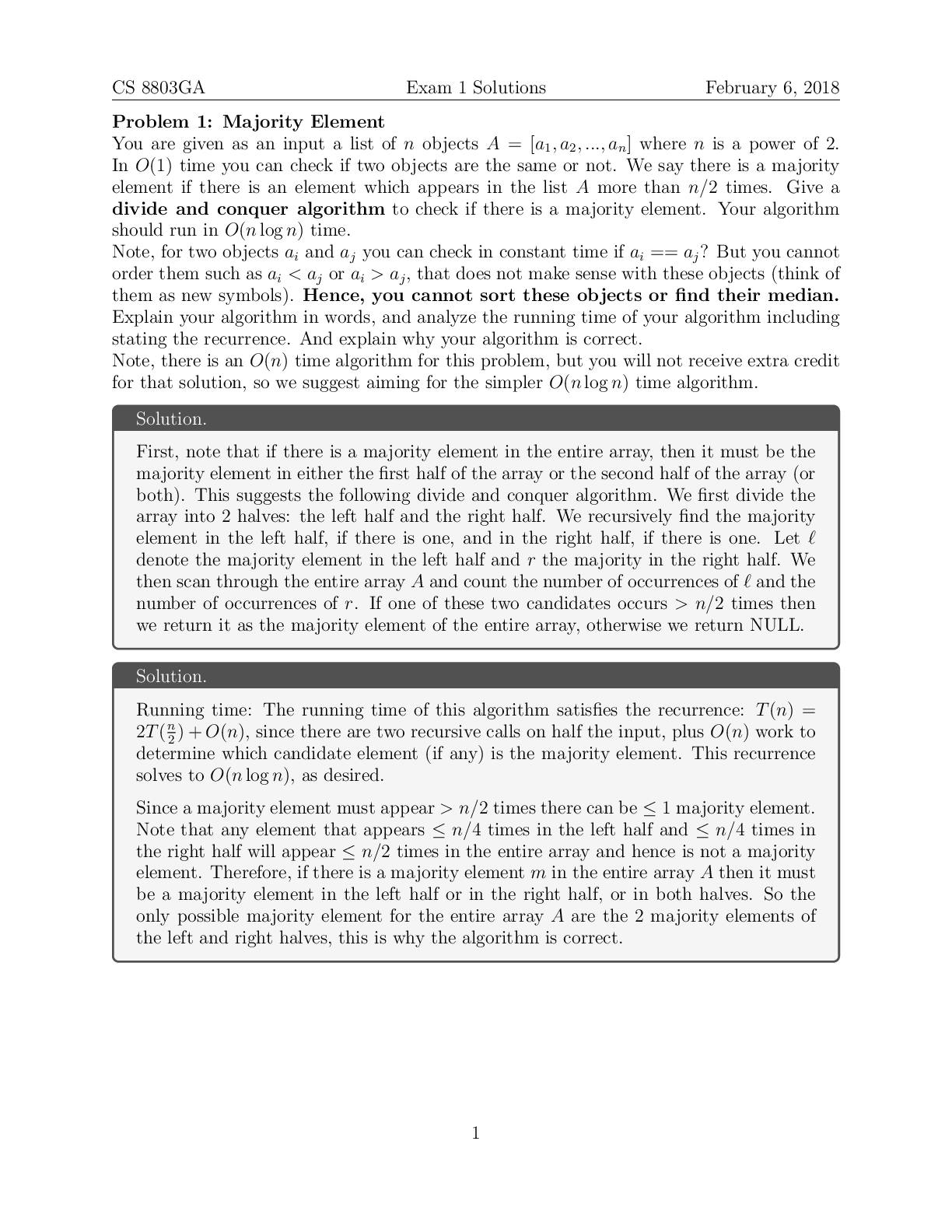

CS 7641 CSE/ISYE 6740 2015 Final Exam Sample Question
Le Song, 12/7 Mon

1 Maximum Likelihood [10 pts]

(a) Pareto Distribution [5 pts]
The Pareto distribution has been used in economics as a model... for a density function with a slowly decaying tail:

$$f(x \mid x_0, \theta) = \theta x_0^{\theta} x^{-\theta-1}, \quad x \ge x_0, \quad \theta > 1$$

Assume that $x_0 > 0$ is given and that $X_1, X_2, \dots, X_n$ is an i.i.d. sample. Find the maximum likelihood estimator of $\theta$.

Answer: The log-likelihood is

$$\ell(\theta) = n \log \theta + n\theta \log x_0 - (\theta + 1) \sum_{i=1}^{n} \log X_i .$$

Setting the derivative with respect to $\theta$ to zero, $n/\theta + n \log x_0 - \sum_{i=1}^{n} \log X_i = 0$, which gives

$$\hat{\theta} = \frac{1}{\overline{\log X} - \log x_0}, \qquad \overline{\log X} = \frac{1}{n} \sum_{i=1}^{n} \log X_i .$$

(b) Dependent Noise Model [5 pts]
Let $X_1, X_2$ be two determinations of a physical constant $\theta$. Consider the model

$$X_i = \theta + e_i, \quad i = 1, 2,$$

and assume $e_i = \alpha e_{i-1} + \epsilon_i$ for $i = 1, 2$, with $e_0 = 0$, where the $\epsilon_i$ are i.i.d. standard normal and $\alpha$ is a known constant. What is the maximum likelihood estimate of $\theta$?

2 Boosting [20 pts]

In this problem, we test your understanding of the AdaBoost algorithm with a simple binary classification example. We are given 10 data points, each belonging to either the square class or the circle class. The following figures show the decision boundaries of three weak learners ($h_1$, $h_2$, and $h_3$). Suppose we boost with these weak learners in that order. In the figures, the darkened region is classified as the square class and the white region as the circle class by the corresponding weak learner.

(a) When we learn the second weak learner $h_2$, list the data points that receive higher weights than the others. [4 pts]
Answer: 6, 7

(b) When we learn the third weak learner $h_3$, list the data points that receive the smallest weights. [4 pts]
Answer: 3, 4, 5, 8

(c) What are $\epsilon_i$ for $i = 1, 2, 3$? [4 pts]
Answer: 0.2, 0.4, 0.2

(d) Draw the final decision boundary in the figure below. [4 pts]

(e) What is the classification error of the final classifier on the training data? [4 pts]
Answer: 0

3 Model Selection [20 pts]

We learned about the bias-variance decomposition and model selection in class. Model selection deals with the problem of choosing the model complexity. In each sub-question, we show a pair of candidate models for a machine learning problem. Mark B on the one with less bias, and mark V on the other. You are not required to explain why. For example:

• Model 1: A model with less bias ( B )
• Model 2: A model with less variance ( V )

(a) [3 pts]
• Model 1: A flexible model with many parameters ( )
• Model 2: A rigid model with a few parameters ( )

(b) [3 pts]
• Model 1: Ridge regression with a large regularization coefficient $\lambda$ ( )
• Model 2: Unregularized linear regression ( )
Answer: V, B

(c) [3 pts]
• Model 1: Polynomial regression model with a higher degree ( )
• Model 2: Polynomial regression model with a lower degree ( )

(d) [3 pts] For logistic regression, $p(y = 1 \mid x; \theta) = \frac{1}{1 + \exp\{-\theta^\top x\}}$.
• Model 1: Logistic regression with large $\theta$ ( )
• Model 2: Logistic regression with small $\theta$ ( )
Answer: B, V

(e) [4 pts]
• Model 1: K-nearest neighbor with large K ( )
• Model 2: K-nearest neighbor with small K ( )
Answer: V, B

(f) [4 pts]
• Model 1: A classifier with an irregular decision boundary ( )
• Model 2: A classifier with a smooth decision boundary ( )

4 Graphical Models [10 pts]

Consider the following Bayesian network with six variables.

(a) Factorize the joint probability distribution in terms of conditional probabilities based on the chain rule. [3 pts]
Answer: $P(A)\,P(B)\,P(C \mid A, B)\,P(D \mid B)\,P(F \mid D)\,P(E \mid C)$

(b) Which of the following independence properties always hold in this model? [3 pts]
• $(A \perp B \mid C)$
• $(C \perp D \mid B)$
• $(E \perp A \mid C)$
• $(F \perp E)$

(c) If we change the network to a Markov network, will the factorization of the joint probability change? [2 pts]

(d) If we change the network to a Markov network, will the independence properties change? [2 pts]

5 Support Vector Machine [20 pts]

Suppose we have a dataset in 1-d space that consists of 3 data points $\{-1, 0, 1\}$ with the positive label and 3 data points $\{-3, -2, 2\}$ with the negative label.

(a) Find a feature map ($\mathbb{R}^1 \to \mathbb{R}^2$) that maps the original 1-d data points to a 2-d feature space so that the positive and negative samples are linearly separable. Draw the dataset after mapping in the 2-d space. [10 pts]

(b) In your plot, draw the decision boundary given by the hard-margin linear SVM. Mark the corresponding support vectors. [5 pts]

(c) For the feature map you use, what is the corresponding kernel $K(x_1, x_2)$? [5 pts]
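A quick numerical check of the closed-form estimator from Question 1(a), offered as a sketch rather than part of the exam solution. The true values $\theta = 3$, $x_0 = 2$, the sample size, and the seed are arbitrary assumptions; the helper names are hypothetical. It relies on Python's `random.paretovariate`, which samples a Pareto law with minimum 1, so scaling by $x_0$ gives the density in 1(a).

```python
import math
import random


def pareto_mle(xs, x0):
    """Closed-form MLE from 1(a): theta_hat = 1 / (mean(log X) - log x0)."""
    mean_log = sum(math.log(x) for x in xs) / len(xs)
    return 1.0 / (mean_log - math.log(x0))


def pareto_loglik(theta, xs, x0):
    """l(theta) = n log(theta) + n*theta*log(x0) - (theta + 1) * sum(log X_i)."""
    n = len(xs)
    return n * math.log(theta) + n * theta * math.log(x0) - (theta + 1) * sum(math.log(x) for x in xs)


def sample_pareto(theta, x0, n, rng):
    # paretovariate(a) has density a * x**(-a - 1) on x >= 1; scaling by x0
    # gives density theta * x0**theta * x**(-theta - 1) on x >= x0.
    return [x0 * rng.paretovariate(theta) for _ in range(n)]


rng = random.Random(0)
true_theta, x0 = 3.0, 2.0
xs = sample_pareto(true_theta, x0, 100_000, rng)
theta_hat = pareto_mle(xs, x0)
print(f"theta_hat = {theta_hat:.3f} (true theta = {true_theta})")
```

Because $\ell(\theta)$ is $n \log \theta$ plus a term linear in $\theta$, it is concave, so the stationary point is the global maximum; the estimate should land close to the true $\theta$ for a sample this large.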
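Question 1(b) has no answer in this sample. One way to work it out, as a sketch under the stated model: since $e_1 = \epsilon_1$ and $\epsilon_2 = e_2 - \alpha e_1$, we get $\epsilon_1 = X_1 - \theta$ and $\epsilon_2 = X_2 - \alpha X_1 - (1 - \alpha)\theta$. The map from $(\epsilon_1, \epsilon_2)$ to $(X_1, X_2)$ has unit Jacobian, so maximizing the likelihood means minimizing $\epsilon_1^2 + \epsilon_2^2$, which gives $\hat{\theta} = \frac{X_1 + (1 - \alpha)(X_2 - \alpha X_1)}{1 + (1 - \alpha)^2}$. The helper names below are hypothetical.

```python
import math


def neg_loglik(theta, x1, x2, alpha):
    # -log L(theta) up to an additive constant: 0.5 * (eps1**2 + eps2**2), with
    # eps1 = X1 - theta and eps2 = (X2 - theta) - alpha * (X1 - theta).
    eps1 = x1 - theta
    eps2 = (x2 - theta) - alpha * (x1 - theta)
    return 0.5 * (eps1 ** 2 + eps2 ** 2)


def theta_mle(x1, x2, alpha):
    # Zero of the derivative:
    # (X1 - theta) + (1 - alpha) * (X2 - alpha*X1 - (1 - alpha)*theta) = 0
    b = 1.0 - alpha
    return (x1 + b * (x2 - alpha * x1)) / (1.0 + b * b)


print(theta_mle(1.0, 3.0, 0.0))  # independent noise: the sample mean
print(theta_mle(1.2, 0.4, 0.5))
```

Two sanity checks: with $\alpha = 0$ the estimate is the sample mean $(X_1 + X_2)/2$, and with $\alpha = 1$ it is $X_1$, because $X_2 - X_1 = \epsilon_2$ then carries no information about $\theta$.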
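The reasoning behind Question 2(a) can be sketched with the standard AdaBoost reweighting step for labels in $\{-1, +1\}$: $\alpha_t = \tfrac{1}{2}\ln\frac{1 - \epsilon_t}{\epsilon_t}$, misclassified points are multiplied by $e^{\alpha_t}$, correct ones by $e^{-\alpha_t}$, then everything is renormalized. The figures are not available, so the mask below is a hypothetical reconstruction: points numbered 1 to 10, with $h_1$ misclassifying points 6 and 7 (consistent with answers (a) and $\epsilon_1 = 0.2$). Function names are illustrative, not from the course.

```python
import math


def adaboost_round(weights, correct):
    """One AdaBoost reweighting step (labels in {-1, +1}).

    weights: current distribution over the training points (sums to 1).
    correct: one boolean per point, True where the weak learner is right.
    Returns (eps, alpha, new_weights).
    """
    # Weighted training error of this weak learner.
    eps = sum(w for w, ok in zip(weights, correct) if not ok)
    # Vote weight of this weak learner in the final classifier.
    alpha = 0.5 * math.log((1.0 - eps) / eps)
    # Misclassified points are scaled by e^alpha, correct ones by e^-alpha.
    unnormalized = [w * math.exp(-alpha if ok else alpha) for w, ok in zip(weights, correct)]
    z = sum(unnormalized)  # normalizer Z_t
    return eps, alpha, [u / z for u in unnormalized]


def final_classifier(alphas, predictions):
    """Sign of the alpha-weighted vote; predictions[t][i] is h_t(x_i) in {-1, +1}.
    A vote of exactly 0 is broken toward +1."""
    n = len(predictions[0])
    votes = [sum(a * p[i] for a, p in zip(alphas, predictions)) for i in range(n)]
    return [1 if v >= 0 else -1 for v in votes]


# Hypothetical first round: uniform weights, h1 wrong on points 6 and 7 only.
weights = [0.1] * 10
correct_h1 = [i not in (6, 7) for i in range(1, 11)]
eps1, alpha1, w2 = adaboost_round(weights, correct_h1)
print(eps1, round(alpha1, 4), [round(w, 4) for w in w2])
```

Under this assumption, $\alpha_1 = \ln 2$, and points 6 and 7 end up with weight 0.25 each while the other eight drop to 0.0625, so the two mistakes carry half of the total weight when $h_2$ is trained.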
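The bias-variance trade-off behind Question 3(e) can be illustrated with a small Monte Carlo experiment on k-NN regression. Everything in the setup is an assumption chosen for illustration: the target $\sin(2\pi x)$, 50 evenly spaced training inputs, Gaussian noise with standard deviation 0.3, and the test grid. Each trial redraws the noisy targets, and squared bias and variance are estimated at fixed test points.

```python
import math
import random


def target(x):
    # Hypothetical true regression function for the simulation.
    return math.sin(2 * math.pi * x)


def knn_predict(train_x, train_y, x, k):
    # Average the targets of the k training inputs closest to x (1-d inputs).
    nearest = sorted(range(len(train_x)), key=lambda j: abs(train_x[j] - x))[:k]
    return sum(train_y[j] for j in nearest) / k


def bias_variance(k, trials=200, n=50, noise=0.3, seed=0):
    """Monte Carlo estimates of squared bias and variance of k-NN regression,
    averaged over a grid of test points."""
    rng = random.Random(seed)
    train_x = [(j + 0.5) / n for j in range(n)]  # fixed design on [0, 1]
    test_x = [0.1 * t + 0.05 for t in range(10)]
    preds = [[] for _ in test_x]
    for _ in range(trials):
        # Redraw the noisy training targets to simulate a new training set.
        train_y = [target(x) + rng.gauss(0.0, noise) for x in train_x]
        for t, x in enumerate(test_x):
            preds[t].append(knn_predict(train_x, train_y, x, k))
    bias2 = var = 0.0
    for t, x in enumerate(test_x):
        mean = sum(preds[t]) / trials
        bias2 += (mean - target(x)) ** 2
        var += sum((p - mean) ** 2 for p in preds[t]) / trials
    return bias2 / len(test_x), var / len(test_x)


for k in (1, 25):
    b2, v = bias_variance(k)
    print(f"K={k:2d}: bias^2={b2:.4f}  variance={v:.4f}")
```

A large K averages many neighbors, which shrinks the variance (roughly noise²/K) but smooths over the curvature of the target and raises the bias; a small K does the opposite. This matches the "V, B" answer given for (e).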
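Question 4(b) can be checked mechanically. Because the network figure is missing, the edges A→C, B→C, B→D, D→F, C→E used below are an assumption read off the factorization in 4(a). The sketch applies the moralized-ancestral-graph criterion, which is equivalent to d-separation: keep the query variables and their ancestors, connect co-parents, drop edge directions, delete the conditioning set, and test whether the two variables are still connected.

```python
from itertools import combinations

# Assumed structure, read off P(A)P(B)P(C|A,B)P(D|B)P(F|D)P(E|C).
PARENTS = {"A": set(), "B": set(), "C": {"A", "B"}, "D": {"B"}, "E": {"C"}, "F": {"D"}}


def ancestors(nodes):
    # The given nodes together with all of their ancestors.
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in PARENTS[stack.pop()]:
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(x, y, given=()):
    """True if x is independent of y given `given` in every distribution
    that factorizes over the DAG (moralized ancestral graph test)."""
    keep = ancestors({x, y, *given})
    adj = {v: set() for v in keep}
    for child in keep:
        parents = PARENTS[child]  # ancestral set is closed, so parents are in keep
        for p in parents:  # undirected parent-child edges
            adj[p].add(child)
            adj[child].add(p)
        for p, q in combinations(parents, 2):  # "marry" co-parents
            adj[p].add(q)
            adj[q].add(p)
    # Remove the conditioning set, then search for a path from x to y.
    blocked = set(given)
    seen, stack = {x}, [x]
    while stack:
        for nb in adj[stack.pop()]:
            if nb not in seen and nb not in blocked:
                seen.add(nb)
                stack.append(nb)
    return y not in seen


queries = [("A", "B", ("C",)), ("C", "D", ("B",)), ("E", "A", ("C",)), ("F", "E", ())]
for x, y, z in queries:
    print(f"{x} indep {y} | {z}: {d_separated(x, y, z)}")
```

With these edges the check reports that $(C \perp D \mid B)$ and $(E \perp A \mid C)$ hold, while $(A \perp B \mid C)$ fails (conditioning on the common child C couples A and B) and $(F \perp E)$ fails (the path F ← D ← B → C → E is open).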
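For Question 5, one possible choice (an assumption, not the official answer) is the feature map $\phi(x) = (x, x^2)$, whose induced kernel is $K(x_1, x_2) = x_1 x_2 + x_1^2 x_2^2$. The positives map to $x^2 \le 1$ and the negatives to $x^2 \ge 4$. By the $\pm x$ symmetry of the closest points $\pm 1$ and $\pm 2$, the hard-margin separator is expected to be the line $x^2 = 5/2$ in feature space. The sketch only verifies the canonical SVM constraints $y_i(w^\top \phi(x_i) + b) \ge 1$ for that candidate, using exact fractions; it does not solve the SVM.

```python
from fractions import Fraction

POSITIVE = [-1, 0, 1]
NEGATIVE = [-3, -2, 2]


def phi(x):
    # Assumed feature map R^1 -> R^2.
    return (x, x * x)


def kernel(x1, x2):
    # Kernel induced by phi: <phi(x1), phi(x2)> = x1*x2 + x1^2 * x2^2.
    return x1 * x2 + (x1 * x2) ** 2


def decision(x, w=(Fraction(0), Fraction(-2, 3)), b=Fraction(5, 3)):
    # Candidate hyperplane w . phi(x) + b = 0, i.e. x^2 = 5/2 in feature space,
    # scaled so that the closest points on each side give |value| = 1.
    u, v = phi(x)
    return w[0] * u + w[1] * v + b


for x in POSITIVE + NEGATIVE:
    print(x, phi(x), decision(x))
```

Under this candidate, the support vectors are $\phi(\pm 1) = (\pm 1, 1)$ and $\phi(\pm 2) = (\pm 2, 4)$, and the geometric margin is $1/\lVert w \rVert = 3/2$.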

Uploaded on May 13, 2022. Number of pages: 6.
