
CS 234 Winter 2020 Assignment 1 Due: January 22 at 11:59 pm


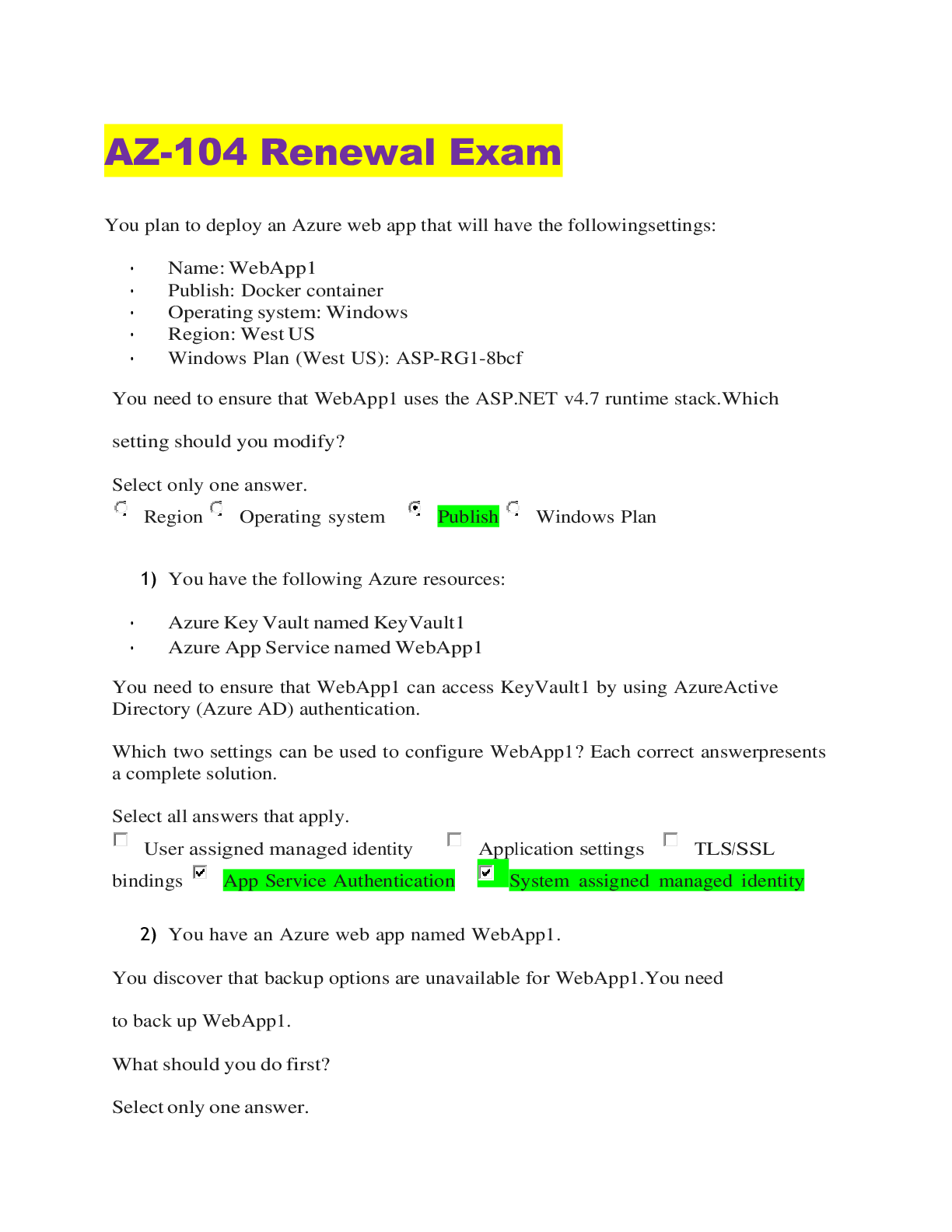

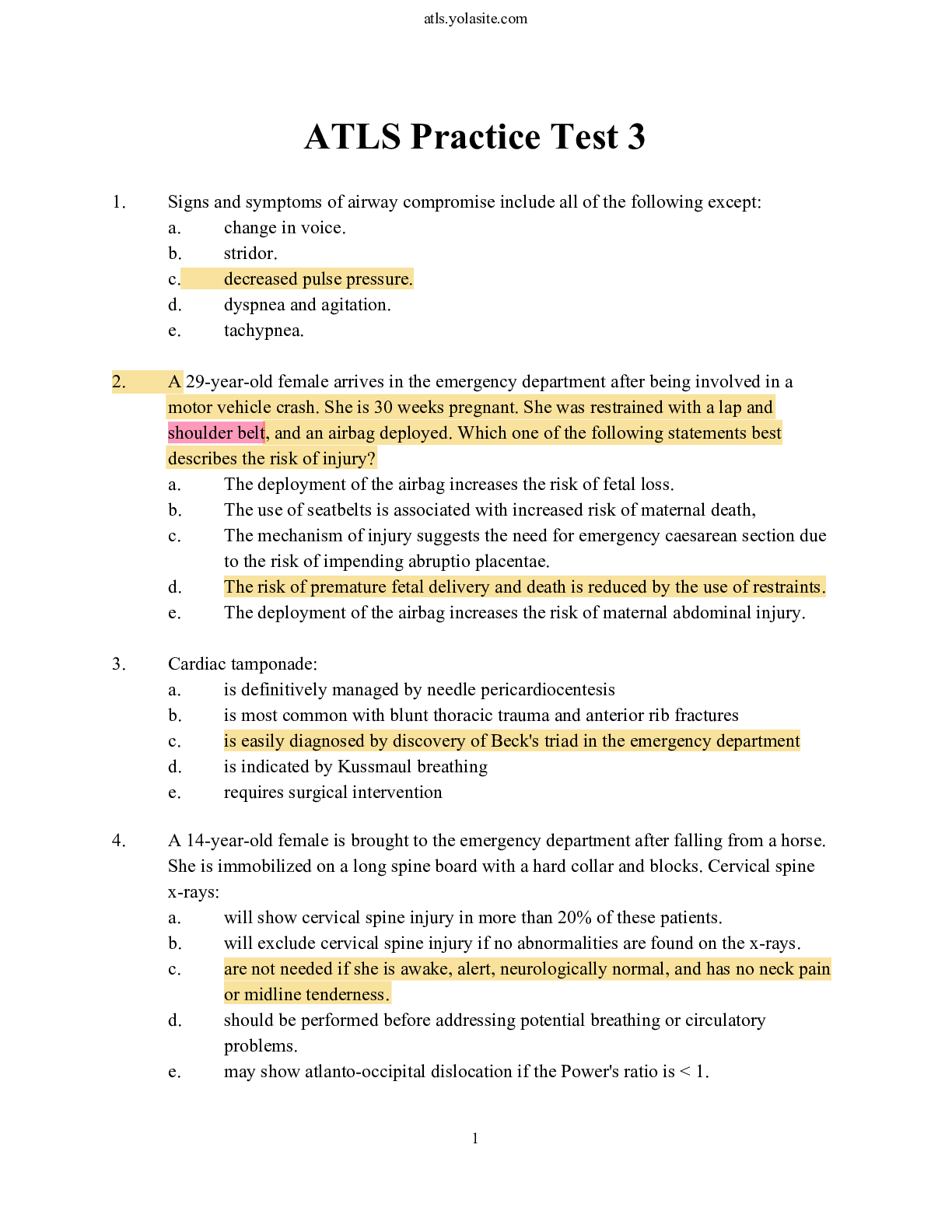

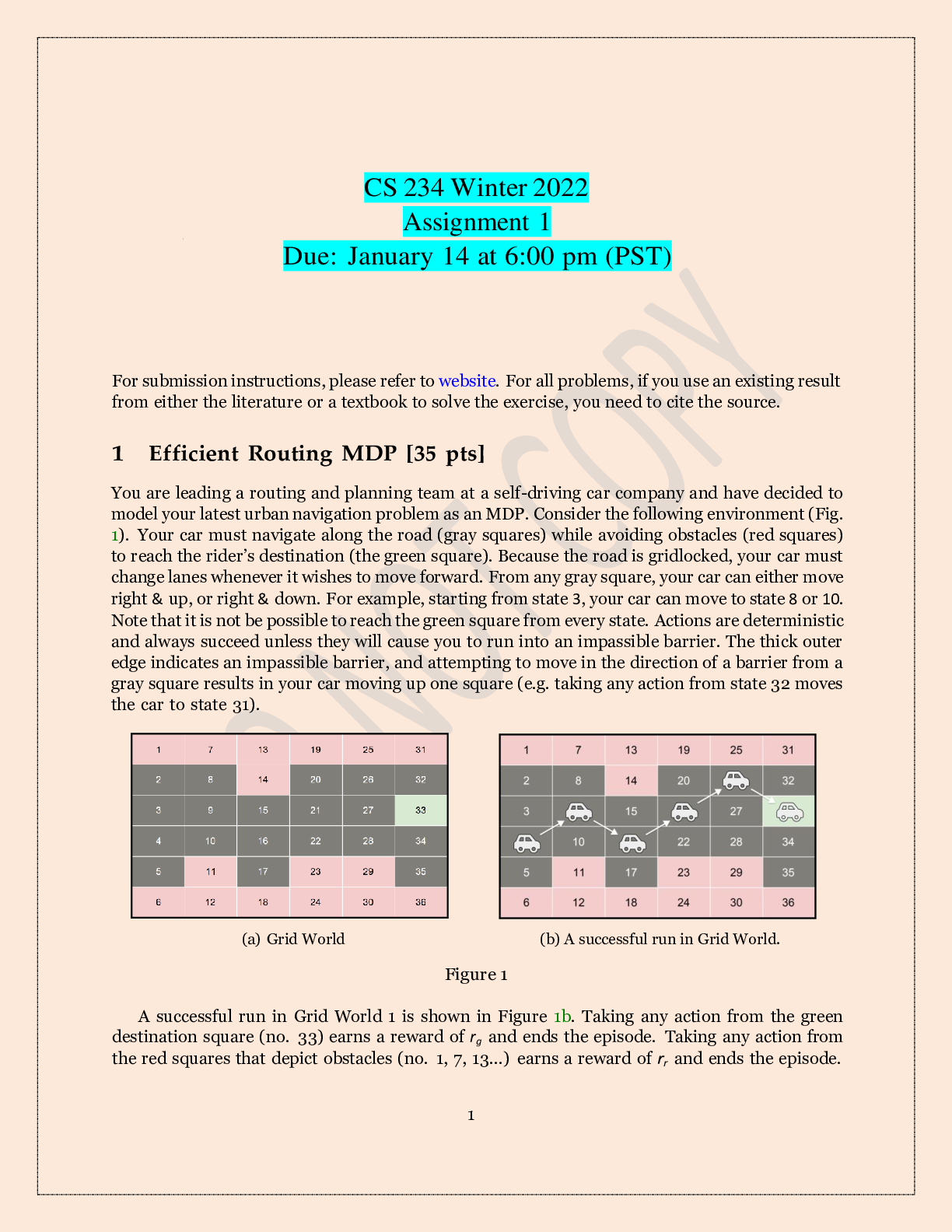

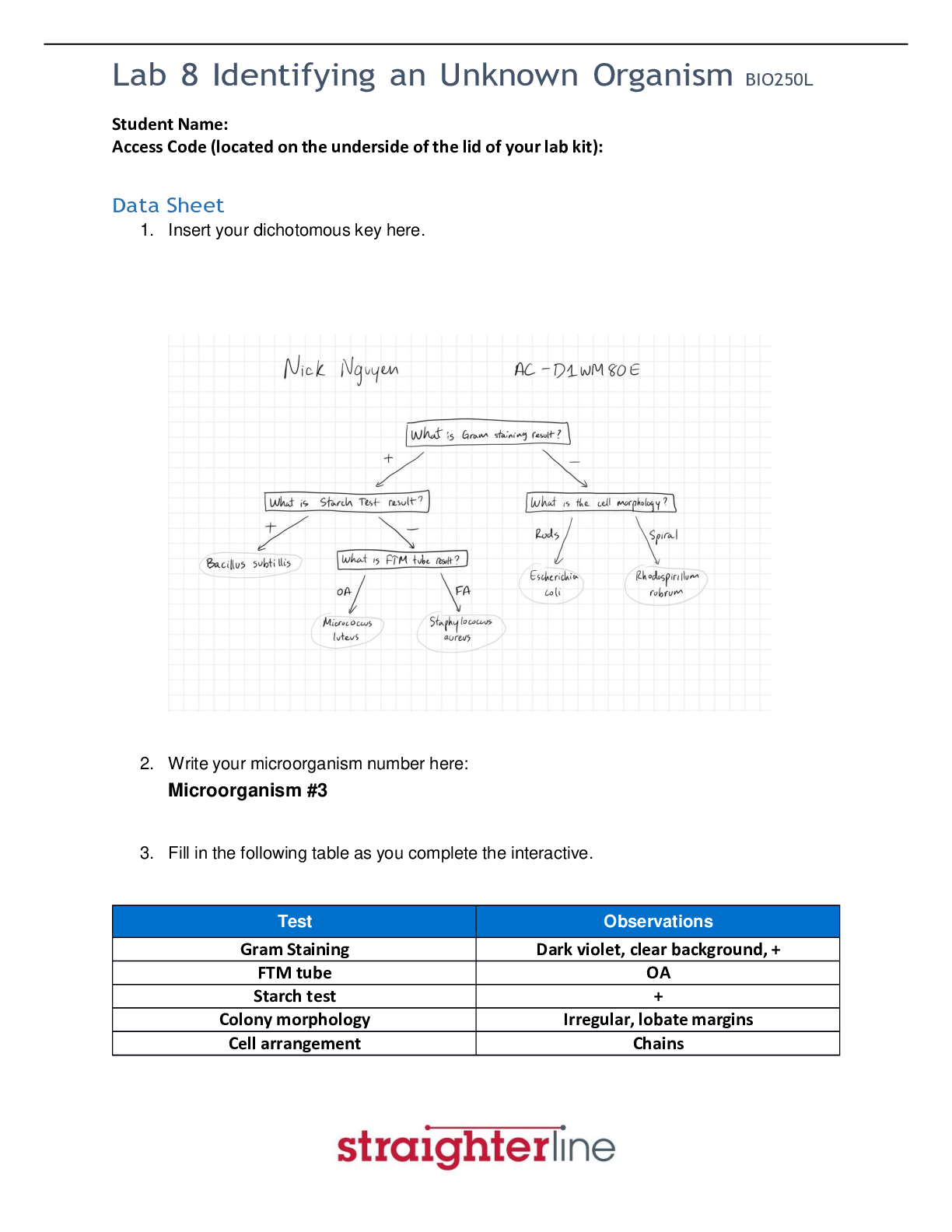

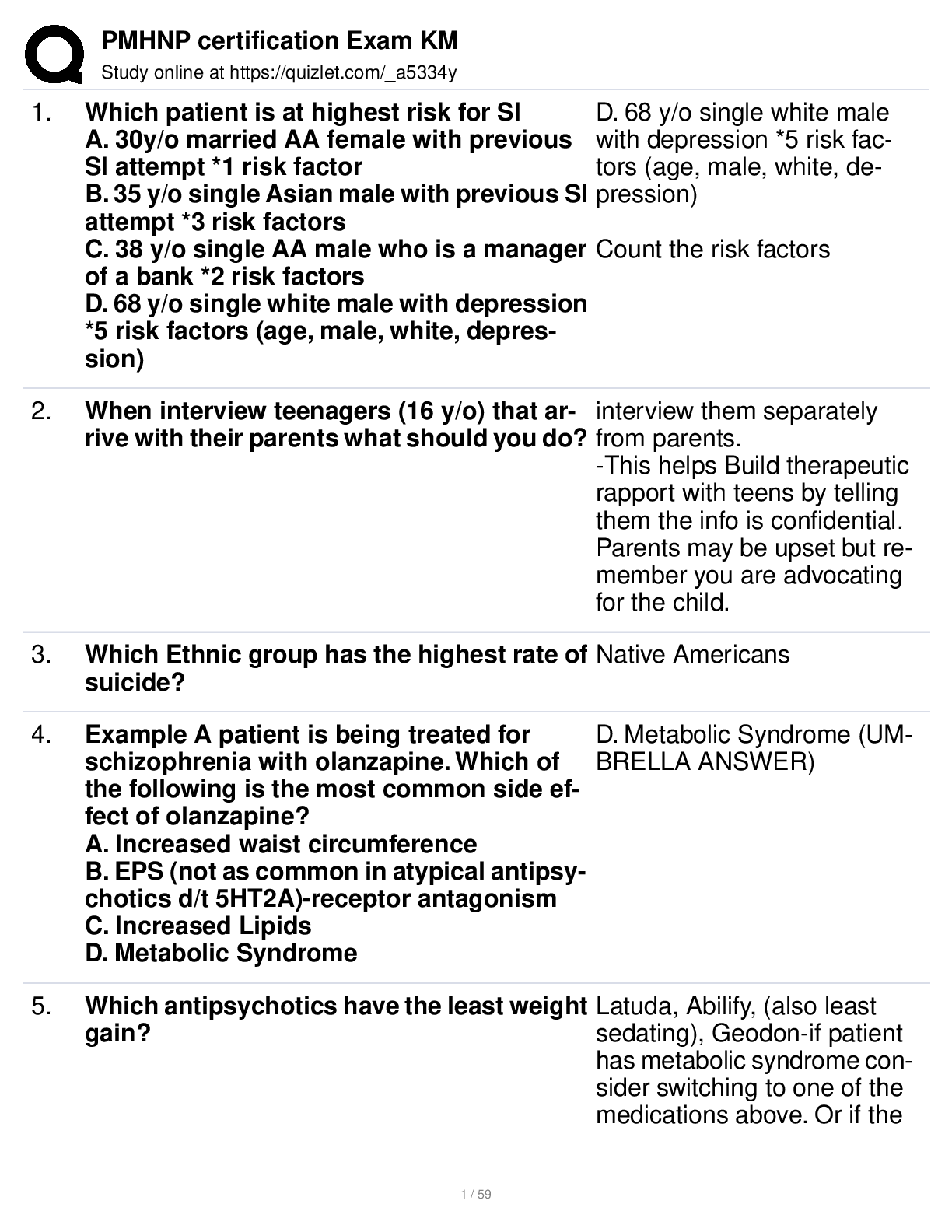

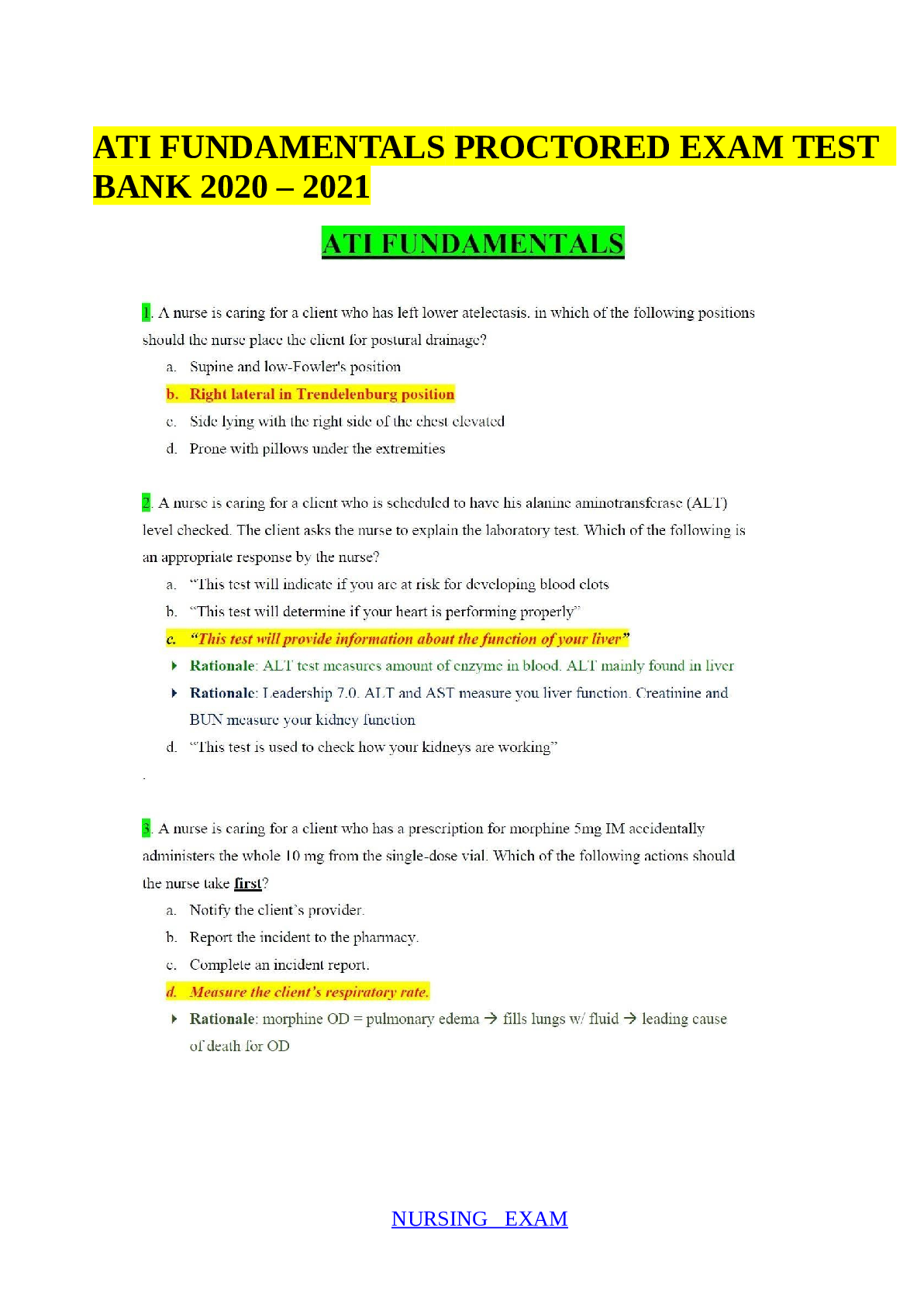

For submission instructions please refer to the website. For all problems, if you use an existing result from either the literature or a textbook to solve the exercise, you need to cite the source.

1 Gridworld [15 pts]

Consider the following grid environment. Starting from any unshaded square, you can move up, down, left, or right. Actions are deterministic and always succeed (e.g. going left from state 16 goes to state 15) unless they will cause the agent to run into a wall. The thicker edges indicate walls, and attempting to move in the direction of a wall results in staying in the same square (e.g. going in any direction other than left from state 16 stays in 16). Taking any action from the green target square (no. 12) earns a reward of $r_g$ (so $r(12, a) = r_g \;\forall a$) and ends the episode. Taking any action from the red square of death (no. 5) earns a reward of $r_r$ (so $r(5, a) = r_r \;\forall a$) and ends the episode. Otherwise, from every other square, taking any action is associated with a reward $r_s \in \{-1, 0, +1\}$ (even if the action results in the agent staying in the same square). Assume the discount factor $\gamma = 1$, $r_g = +5$, and $r_r = -5$ unless otherwise specified.

(a) (3pts) Define the value of $r_s$ that would cause the optimal policy to return the shortest path to the green target square (no. 12). Using this $r_s$, find the optimal value for each square.

Solution: $r_s = -1$.

(b) (3pts) Let's refer to the value function derived in (a) as $V^{\pi_g}$ and the policy as $\pi_g$. Suppose we are now in a new gridworld where all the rewards ($r_s$, $r_g$, and $r_r$) have $+2$ added to them. Consider still following $\pi_g$ of the original gridworld. What will the new values $V^{\pi_g}$ be in this second gridworld?

Solution

(c) (3pts) Consider a general MDP with rewards and transitions. Consider a discount factor of $\gamma$. For this case assume that the horizon is infinite (so there is no termination). A policy $\pi$ in this MDP induces a value function $V^\pi$ (let's refer to this as $V^\pi_{\text{old}}$). Now suppose we have a new MDP where the only difference is that all rewards have a constant $c$ added to them. Can you come up with an expression for the new value function $V^\pi_{\text{new}}$ induced by $\pi$ in this second MDP in terms of $V^\pi_{\text{old}}$, $c$, and $\gamma$?

Solution: for $\gamma < 1$,

$$V^\pi_{\text{new}}(s) = \mathbb{E}_\pi\left[\sum_{t=0}^{\infty} \gamma^t (r_t + c) \,\middle|\, s_0 = s\right] = V^\pi_{\text{old}}(s) + \sum_{t=0}^{\infty} \gamma^t c = V^\pi_{\text{old}}(s) + \frac{c}{1-\gamma}$$
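The question in part (c) can be checked numerically. In an infinite-horizon MDP with $\gamma < 1$, adding a constant $c$ to every reward contributes $c \sum_{t \ge 0} \gamma^t = c/(1-\gamma)$ to the value of every state under a fixed policy. The sketch below evaluates a fixed policy on a small Markov chain before and after the shift. The chain `P`, the rewards `r`, and the helper `evaluate_policy` are hypothetical illustrations and are not taken from the assignment.

```python
def evaluate_policy(P, r, gamma, tol=1e-12, max_iters=100_000):
    """Iterative policy evaluation for a fixed policy.

    P[s][s2] is the probability of moving from s to s2 under the policy,
    and r[s] is the expected immediate reward in s under the policy.
    """
    n = len(r)
    V = [0.0] * n
    for _ in range(max_iters):
        # Bellman backup for a fixed policy: V(s) = r(s) + gamma * sum_s2 P(s, s2) V(s2)
        V_next = [
            r[s] + gamma * sum(P[s][s2] * V[s2] for s2 in range(n))
            for s in range(n)
        ]
        if max(abs(a - b) for a, b in zip(V, V_next)) < tol:
            return V_next
        V = V_next
    return V


# Hypothetical 3-state chain induced by some fixed policy (each row sums to 1).
P = [
    [0.5, 0.5, 0.0],
    [0.1, 0.6, 0.3],
    [0.0, 0.2, 0.8],
]
r = [1.0, -2.0, 0.5]   # assumed per-state rewards
gamma = 0.9            # assumed discount factor, must be < 1
c = 2.0                # constant added to every reward

V_old = evaluate_policy(P, r, gamma)
V_new = evaluate_policy(P, [x + c for x in r], gamma)

shift = c / (1 - gamma)  # predicted uniform shift
diffs = [new - old for new, old in zip(V_new, V_old)]
print("per-state difference:", diffs)
print("predicted shift:", shift)
```

Every entry of `diffs` should agree with `shift` up to the convergence tolerance, whatever the transition probabilities are. The same argument does not carry over directly to the episodic gridworld in part (b), where the added reward accumulates only until the episode ends, so the shift there depends on how many steps each state takes to terminate.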

