Computer Science > EXAM REVIEW > Questions and Answers > Georgia Institute Of TechnologyCSE 6740 Final. CSE/ISYE 6740 Final Exam (202 (All)

Questions and Answers > Georgia Institute Of TechnologyCSE 6740 Final. CSE/ISYE 6740 Final Exam (2020 Fall)

Document Content and Description Below

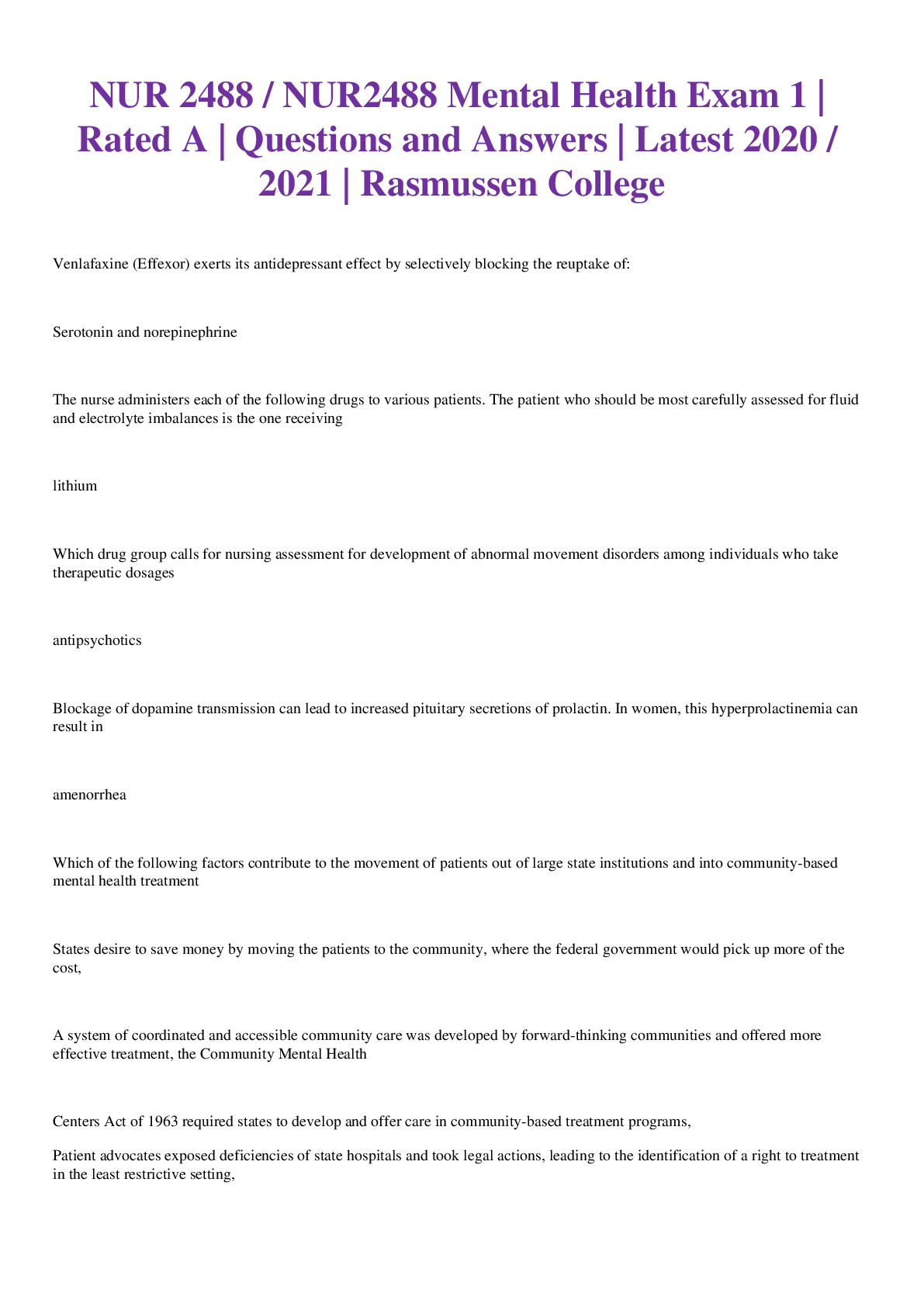

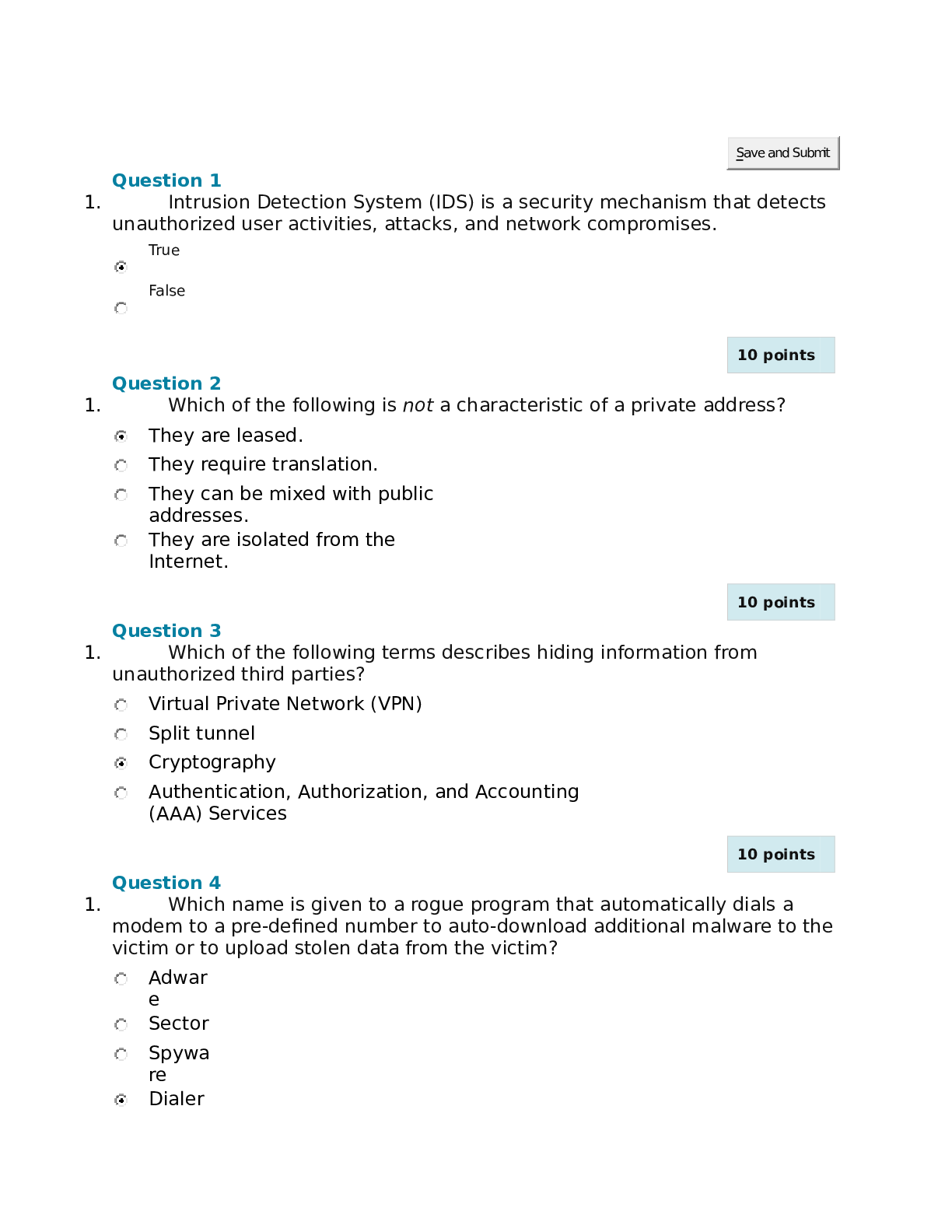

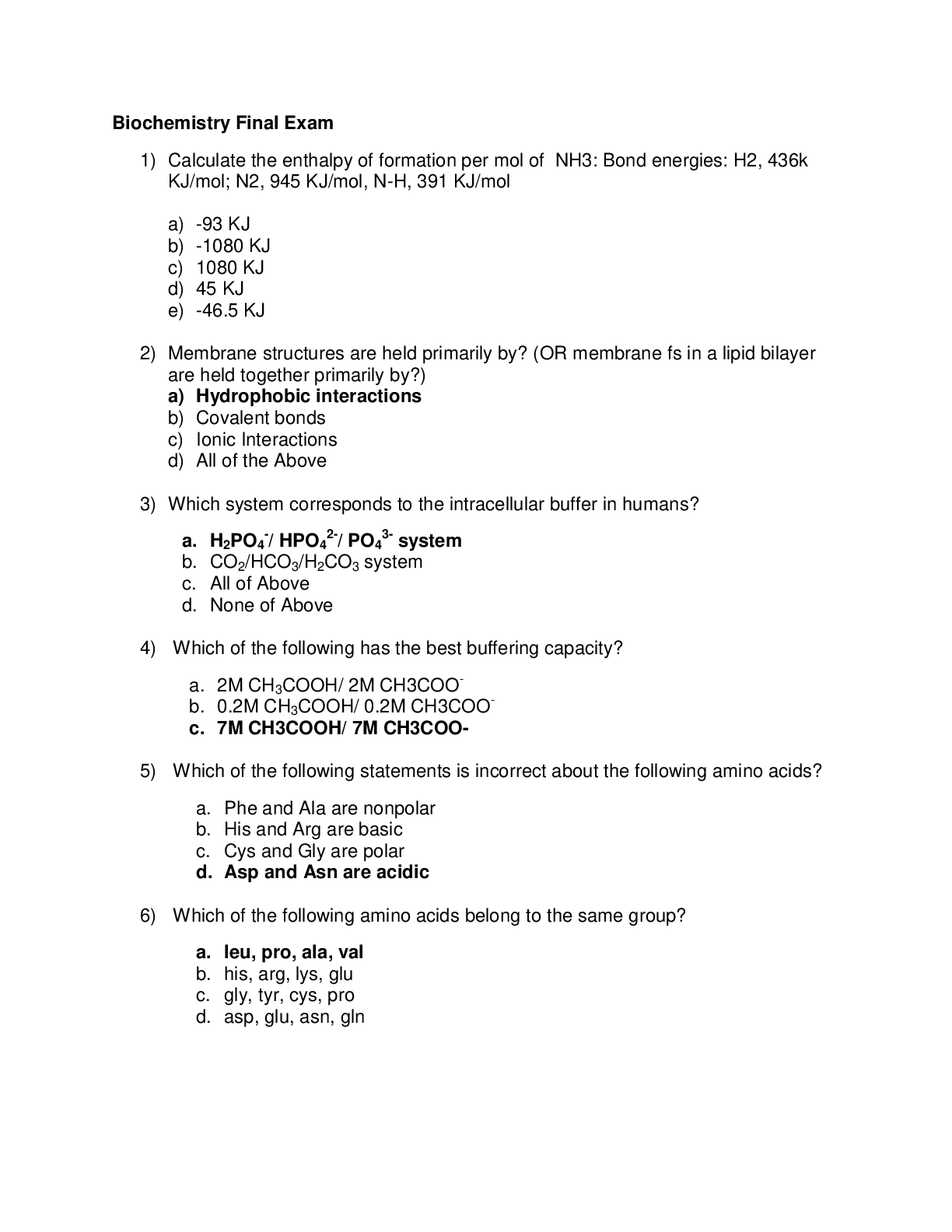

CSE/ISYE 6740 Final Exam (2020 Fall) • GT ID: jliu451 • E-mail: [email protected] Instructions: • Try your best to be clear as much as possible. No credit may be given to unreadable writing... . • The exam is open book and open note, but no electronic devices (including smart phones) are allowed. • Please ask for extra sheet if you are out of space. • Good luck! 1 1 A Big Picture [12 pts] We learned many machine learning methods during the semester. We listed some of them below: 1. spectral algorithm 2. K-medoids 3. Gaussian mixture 4. Histogram 5. K-nearest neighbor 6. K-means 7. Kernel density estimation 8. Linear regression 9. Logistic regression 10. Naive Bayes 11. Neural networks 12. Principal component analysis 13. Singular value decomposition 14. Support vector machines 15. isomap 2 (a) List all methods which are supervised learning method. [2 pts] (b) List all methods which are used for classification. [2 pts] (c) List all methods which are used for clustering. [2 pts] (d) List all methods which are used for density estimation. [2 pts] (e) List all methods which are linear classifiers. [2 pts] (f) List all methods which are used for dimension reduction. [2 pts] 2 Maximum Likelihood [14 pts] (a) [10 pts] The random variable x has a probability density function f(x) = ( for θ > 1. There are n observations xi, i = 1; : : : ; n, drawn independently from this distribution. (i) Write the cumulative distribution function of x. [3 pts] (ii) Derive the expected value of y. [3 pts] (iii) Write the maximum likelihood estimation of θ. [4 pts] 4 (b) [4 pts] Suppose that X is a discrete random variable with the following probability mass function: where 0 ≤ θ ≤ 1 is a parameter. The following 10 independent observations were taken from such a distribution: (-1,2,-1,2, 1,2,-1,0,2,-1). What is the maximum likelihood estimation of θ. 3 Naive Bayes Classification [14 pts] Consider a classification problem with the table of observations below. X1 and X2 are two binary random variables which are the observed variables. Y 1 are the class labels which is observed for the training data given below. We will use the Naive Bayes classifier to classify a new instance after training on the data below and compare the results. (a) Derive the Naive Bayes classification rule. [3 pts] Hint: at this step you do not need the data yet. 6 (b) Estimate the conditional probabilities P(X1jY 1) and P(X2jY 1). [4 pts] (c) Classify the point (1; 1) for class Y1. [3 pts] (d) Use the same training dataset and calculate the better splitting attribute to use as the first level attribute in constructing decision tree with entropy. [4 pts] 7 4 Hidden Markov Model [12 pts] Consider a Hidden Markov Model (HMM) as shown in figure with states Xt 2 f0; 1g, observations Ot 2 fA; Bg, and parameters in the following tables. Suppose that O1 = A and O2 = B is observed. 4.1 (a) Compute the probability that the HMM is in state 0 at time t = 2, i.e. P(X2 = 0). [6 pts] Hint: You don’t need to use the observations Ot to answer this question. 4.2 (b) Compute the probability that we observe O1 = A and O2 = A at time t = 2, i.e. P(X2; O1 = A; O2 = A). [6 pts] 8 Figure 1: Plot of points S1 = f(1; 4); (1; 5); (2; 4); (2; 5); (3; 3)g; S2 = f(3; 2); (3; 1); (4; 1); (5; 1); (6; 1); (6; 2)g 5 Support Vector Machines [22 pts] Given 2-dimensional input data points S1 = f(1; 4); (1; 5); (2; 4); (2; 5); (3; 3)g; S2 = f(3; 2); (3; 1); (4; 1); (5; 1); (6; 1); (6; 2)g, where S1 has the data points from the positive class and S2 has data points from the negative class: (a) [7 pts] Suppose you are using a linear SVM with no provision for noise (i.e. a Linear SVM that is trying to maximize its margin while ensuring all data points are on their correct sides of the margin). Draw three lines on the above diagram, showing classification boundary and the two sides of the margin. Circle the support vector(s). (b) [7 pts] Using the familiar LSVM classifiar notation of the classifier sign(wT x + b), calculate the values of w and b learned for part (a). 9 (c) [8 pts] Assume you are using a noise-tolerant Linear SVM which tries to optimize min using the notation in lecture slides. Question: is it possible to invent a dataset and a positive value of C in which the dataset is linearly seperable but the linear SVM classifier would never the less misclassify at least one training point? If it is possible to invent such an example, please sketch the example and suggest a value for C. If it is not possible, explain why not. 10 6 Graphical Models [12 pts] Consider a conditional random field (CRF) in full generality, with n observed variables and n latent variables which we package into vectors x and y respectively, and m1 + m2 parameters packaged into vectors θ and ! of lengths m1 and m2 respectively. We can write the distribution of this CRF as 6.1 (a) Explain why expressing the functions fj; 1 ≤ j ≤ m only in terms of yi and yi-1 and none of the other latent variables does not lose generality. [4 pts] 6.2 (b) Learning a CRF requires working with the log-likelihood. Compute the gradient of the log-likelihood with respect to θ. [8 pts] 11 7 Nearest Neighbor [14 pts] (a) Using Euclidean Distance as the distance metric, sketch the 1-nearest neighbor decision boundary for this dataset in Fig. 1 [4 pts] Figure 2: Dataset for KNN binary classification task 12 (b) What is a good value for k in this dataset? K=1 or K=3 or k=9. Explain your answer [2 pts] (c) In general, how to determine a good value for k to solve a particular classification problem. [2 pts] (d) Which will smooth the decision boundary of K-NN, increasing the value of K or decreasing the value of K? [2 pts] (e) Which classifier do you think would have a higher chance of doing well in terms of accuracy in Fig. 2: KNN (with K=1) or SVM (without using kernel)? Why? [2 pts] Figure 3 (f) Which classifier do you think would have a higher chance of doing well in terms of accuracy in Fig. 3: KNN (with K=1) or SVM (without using kernel)? Why? [2 pts] [Show More]

Last updated: 1 year ago

Preview 1 out of 16 pages

Reviews( 0 )

Document information

Connected school, study & course

About the document

Uploaded On

May 13, 2022

Number of pages

16

Written in

Additional information

This document has been written for:

Uploaded

May 13, 2022

Downloads

0

Views

39

ddtete3.png)