Cloudera Certified Administrator for Apache Hadoop

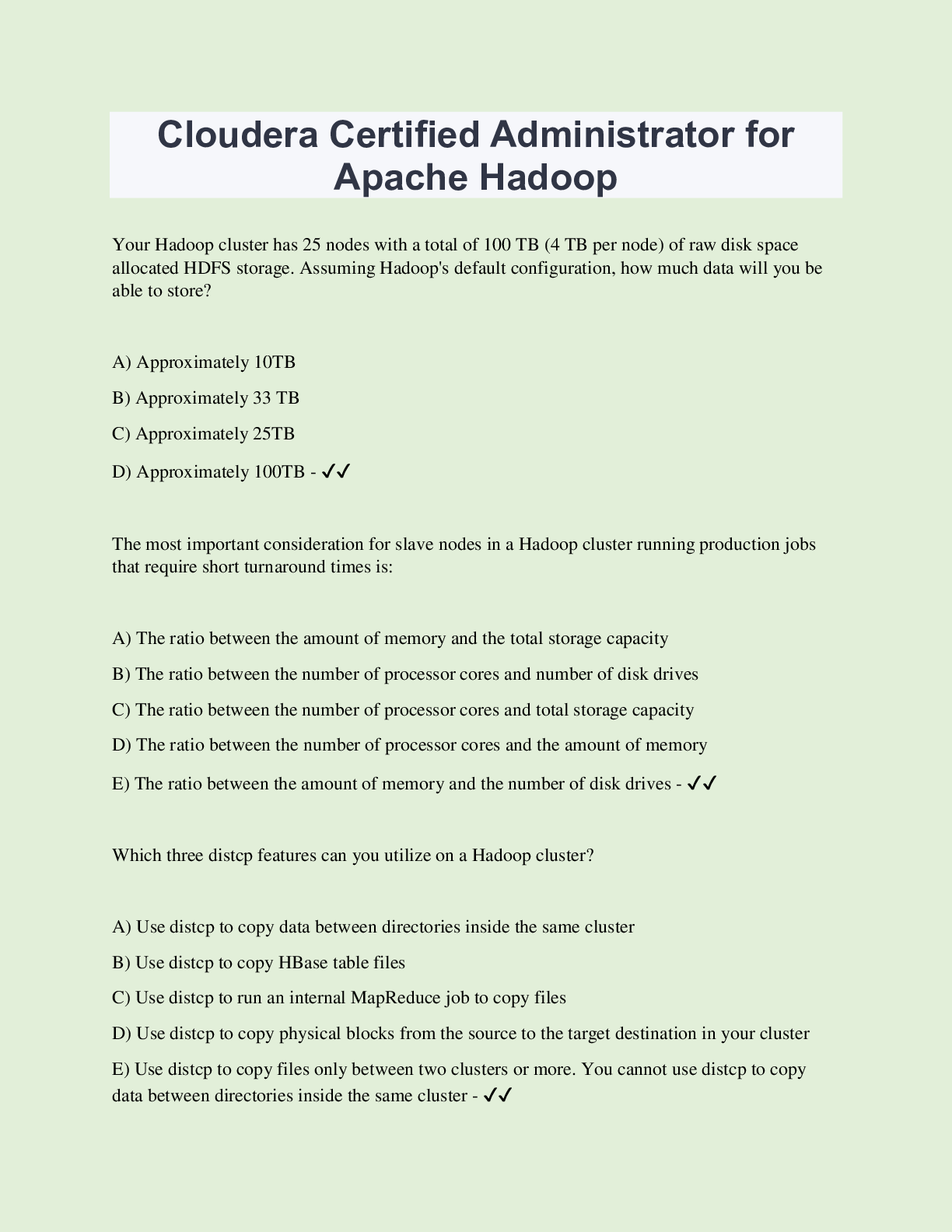

Your Hadoop cluster has 25 nodes with a total of 100 TB (4 TB per node) of raw disk space allocated to HDFS storage. Assuming Hadoop's default configuration, how much data will you be able to store?
A) Approximately 10 TB
B) Approximately 33 TB
C) Approximately 25 TB
D) Approximately 100 TB

The most important consideration for slave nodes in a Hadoop cluster running production jobs that require short turnaround times is:
A) The ratio between the amount of memory and the total storage capacity
B) The ratio between the number of processor cores and the number of disk drives
C) The ratio between the number of processor cores and the total storage capacity
D) The ratio between the number of processor cores and the amount of memory
E) The ratio between the amount of memory and the number of disk drives

Which three distcp features can you utilize on a Hadoop cluster?
A) Use distcp to copy data between directories inside the same cluster
B) Use distcp to copy HBase table files
C) Use distcp to run an internal MapReduce job to copy files
D) Use distcp to copy physical blocks from the source to the target destination in your cluster
E) Use distcp to copy files only between two or more clusters. You cannot use distcp to copy data between directories inside the same cluster

You have a cluster with 32 slave nodes and 3 master nodes running MapReduce v1 (MRv1). You execute the command: $ hadoop fsck / Which four cluster conditions will running this command return to you?
A) Blocks replicated improperly or that don't satisfy your cluster enhancement policy (e.g., too many blocks replicated on the same node)
B) Under-replicated blocks
C) The current state of the file system returned from scanning individual blocks on each datanode
D) Number of datanodes
E) Number of dead datanodes
F) Configured capacity of your cluster
G) The current state of the file system according to the namenode
H) The location for every block

Your Hadoop cluster contains nodes in three racks. You have not configured the dfs.hosts property in the NameNode's configuration file. What happens?
A) The NameNode will update the dfs.hosts property to include machines running the DataNode daemon on the next NameNode reboot or with the command dfsadmin -refreshNodes
B) No new nodes can be added to the cluster until you specify them in the dfs.hosts file
C) Any machine running the DataNode daemon can immediately join the cluster
D) Presented with a blank d...
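The arithmetic behind the first question (25 nodes, 4 TB each) can be sketched as follows. This is a rough estimate only: it assumes the default HDFS replication factor of 3 (the `dfs.replication` default) and ignores non-DFS overhead and temporary space. The function name `usable_capacity_tb` is hypothetical and used for illustration.

```python
def usable_capacity_tb(nodes: int, tb_per_node: float, replication: int = 3) -> float:
    """Estimate how much user data fits in HDFS.

    Assumes every block is stored `replication` times (3 is the HDFS default)
    and ignores non-DFS overhead such as temporary or intermediate data.
    """
    raw_tb = nodes * tb_per_node   # total raw disk allocated to HDFS
    return raw_tb / replication    # each TB of data consumes `replication` TB of raw disk


estimate = usable_capacity_tb(nodes=25, tb_per_node=4)
print(f"{estimate:.1f} TB")  # prints "33.3 TB"
```

On a real cluster the usable figure would be somewhat lower, since part of each disk is usually reserved for non-HDFS use.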

Last updated: 1 year ago

Document information

Uploaded on: Dec 14, 2022

Number of pages: 16
